People: Laura Petrich; Martin Jagersand

Human assistive robotics can help the elderly and those with disabilities with Activities of Daily Living (ADL). Robotics researchers approach this problem bottom-up, publishing methods for the control of different types of movements. Health research, on the other hand, focuses on hospital clinical assessment and rehabilitation using the International Classification of Functioning (ICF), leaving arguably important differences between the two domains. In particular, little is known quantitatively about which ADLs humans perform in their ordinary environments, such as at home or at work. This information can guide robotics development and help prioritize which technology to deploy for in-home assistive robotics. This study draws on several large lifelogging databases, from which we compute (i) ADL task frequency from long-term, low-sampling-frequency video and Internet of Things (IoT) sensor data, and (ii) short-term arm and hand movement data from 30 fps video of domestic tasks. Robotics and health care use different terms and taxonomies to represent tasks and motions. From the quantitative ADL task and ICF motion data, we derive and discuss a robotics-relevant taxonomy in an attempt to bridge these taxonomic differences.

Haptic Teleoperation demo at RiseX 2025!

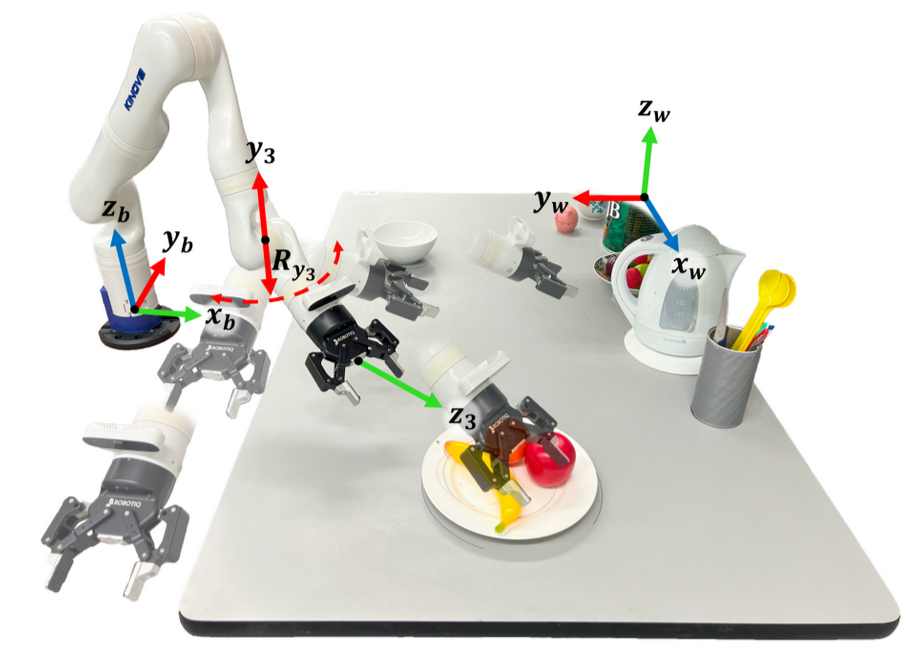

Point and Go: Intuitive Reference Frame Reallocation in Mode

Robot Manipulation through Image Segmentation and Geometric Constraints

Back2Future-SIM: Interactable Immersive Virtual World For Teleoperation

Visual Servoing Based Path Following Controller

Actuation Subspace Prediction with Neural Householder Transforms

Understanding Manipulation Contexts by Vision and Language for Robotic Vision

Learning Geometry from Vision for Robotic Manipulation
