|

Communication mechanisms for cooperative human-robot manipulation tasks in unstructured environments

Robot arm manipulation has revolutionized manufacturing through autonomous, repetitive, high-quality assembly of products ranging from mobile phones to cars. However, robot arms and hands have had little impact outside repetitive tasks in engineered environments. One key reason is that current robots lack the capacity to interact well with humans in unstructured human environments. Our research focuses on communication mechanisms for human-robot manipulation interaction. We most immediately think of human communication in terms of spoken or written language. However, many of our situated daily interactions involve non-verbal communication about spatial information and tasks. Humans use pointing and gestures to communicate spatial information and motions when collaboratively solving manipulation tasks. Our aim is to develop such deictic gesture interfaces for human-robot interaction, using computer vision to analyze both the gestures of the human and the configuration of the environment. Bringing robotic systems to human environments is still a major challenge: user studies, human factors engineering and several iterations of system refinement are needed. Below we present work already performed towards our research goal.

Visual Pointing Gestures for Bi-directional HRI in a Pick-and-Place Task
SEPO: Selecting by Pointing as an Intuitive Human-Robot Command Interface
VIBI: Assistive Vision-Based Interface for Robot Manipulation
Interactive Teleoperation Interface for Semi-Autonomous Control of Robot Arms
Deictic visual pointing to instruct a robot arm and hand |

|

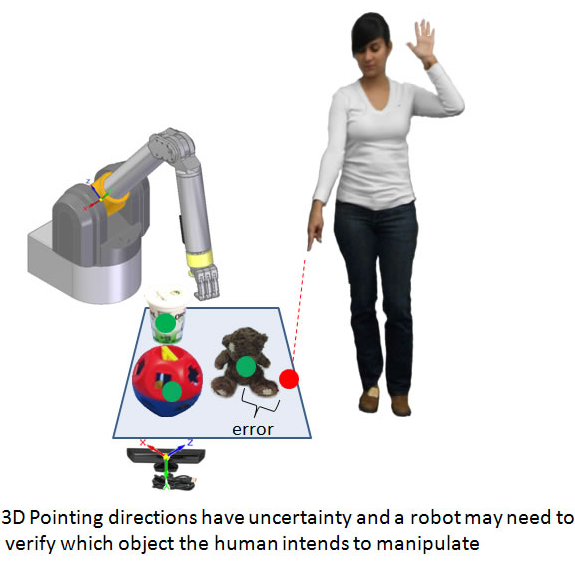

Visual Pointing Gestures for Bi-directional HRI in a Pick-and-Place Task

This research explores visual pointing gestures as a means of two-way non-verbal communication with a robot arm. Such non-verbal instruction is common when humans communicate spatial directions and actions while collaboratively performing manipulation tasks. Using 3D RGB-D sensing, we compare human-human and human-robot interaction in solving a pick-and-place task. In the human-human interaction we study both pointing and other types of gestures performed by humans in a collaborative task. For the human-robot interaction we design a system that allows the user to interact with a 7-DOF robot arm, using gestures to select, pick and drop objects at different locations. Bi-directional confirmation gestures allow the robot (or human) to verify that the right object is selected. We perform experiments in which 8 human subjects collaborate with the robot to manipulate ordinary household objects on a tabletop. Without confirmation feedback, selection accuracy was 70-90% for both humans and the robot. With feedback through confirmation gestures, both humans and our vision-robotic system performed the task accurately every time (100%). A demonstration of our interaction can be seen here.
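The selection-with-confirmation loop described above can be illustrated with a minimal sketch. It is not the system's actual implementation: it assumes the pointing gesture has already been reduced to a 3D ray, ranks objects by their perpendicular distance to that ray, and proposes them one at a time until a confirmation callback accepts. The function names, the distance-based ranking and the `confirm` callback are all hypothetical.

```python
import math

def distance_to_ray(point, origin, direction):
    """Perpendicular distance from a 3D point to a ray starting at origin."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    rel = [p - o for p, o in zip(point, origin)]
    # Projection length along the ray; points behind the origin clamp to it.
    t = max(0.0, sum(r * u for r, u in zip(rel, unit)))
    closest = [o + t * u for o, u in zip(origin, unit)]
    return math.dist(point, closest)

def rank_candidates(objects, origin, direction):
    """Sort object names by how close they lie to the pointing ray."""
    return sorted(objects, key=lambda name: distance_to_ray(objects[name], origin, direction))

def select_with_confirmation(objects, origin, direction, confirm):
    """Propose candidates in ranked order until `confirm` accepts one."""
    for name in rank_candidates(objects, origin, direction):
        if confirm(name):  # hypothetical stand-in for a confirmation gesture
            return name
    return None

# Assumed tabletop layout (metres) and an assumed pointing ray.
objects = {"cup": (0.6, 0.1, 0.0), "box": (0.6, -0.2, 0.0), "can": (0.3, 0.4, 0.0)}
origin, direction = (0.0, 0.0, 0.5), (0.6, 0.05, -0.5)
chosen = select_with_confirmation(objects, origin, direction, lambda name: name == "box")
```

In this example the nearest candidate is rejected and the next one is accepted, which mirrors how a confirmation step can recover from an initial mis-selection.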

Making Pizza with my robot

We envision that our system can be used in different real-life scenarios. For example, a robot can work behind a counter in the role of a shopkeeper: a client points to a particular object and, using confirmation feedback, the robot reaches for the desired product. In another situation a robot can serve as a chef at a hotel breakfast buffet, where the client points to the ingredients to include in their omelette. In a metal workshop a robot can assist a welder by picking and placing parts. The welder only has to point to them, avoiding the handling of heavy and extremely hot parts. To bring our study to a practical situation, we made our robot capable of preparing pizza by exchanging gestures with a human. A demonstration of our interaction can be seen here.

Camilo Perez Quintero, Martin Jägersand. "Robot Making Pizza". 3rd place in the IEEE Robotics and Automation Society (RAS) SAC Video contest, May 2015. |

|

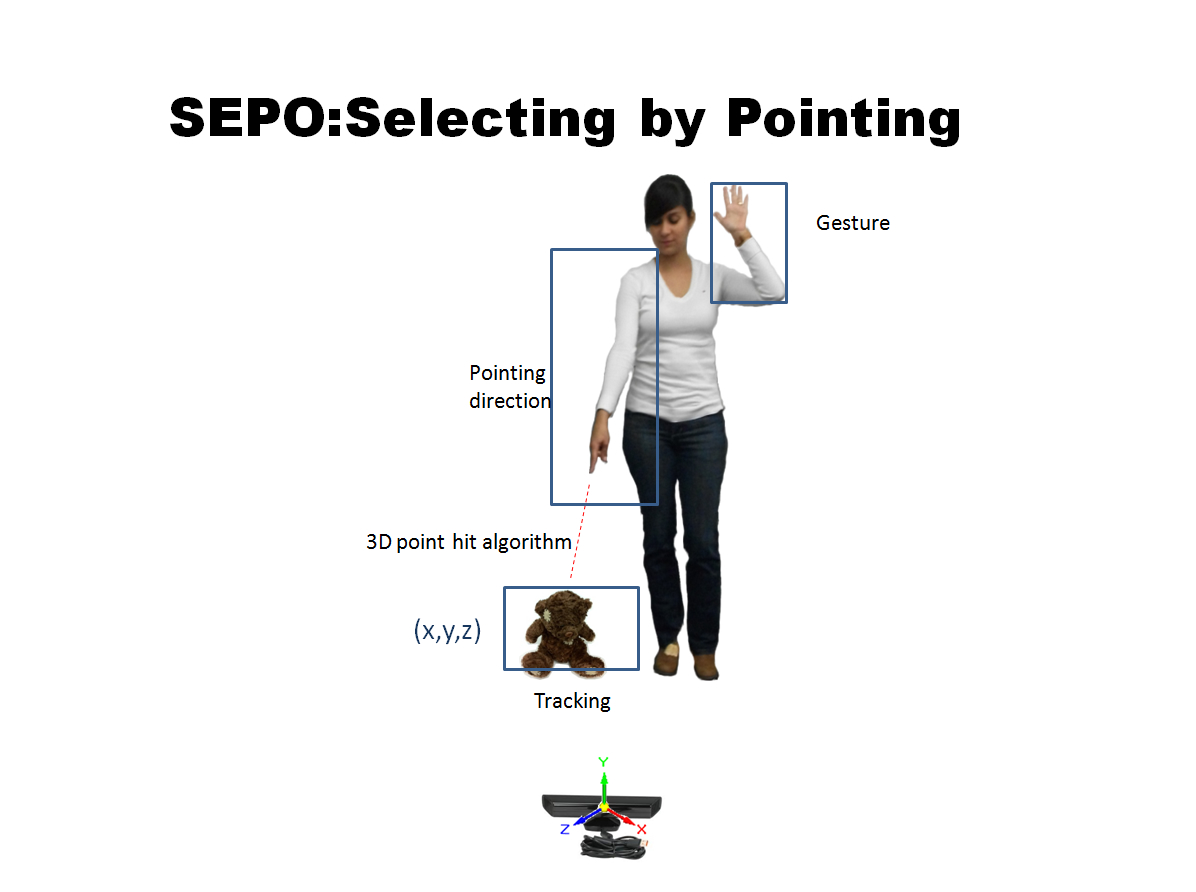

SEPO: Selecting by Pointing as an Intuitive Human-Robot Command Interface

Pointing to indicate direction or position is an intuitive communication mechanism that humans use at all stages of life. Our aim is to develop a natural human-robot command interface using pointing gestures for human-robot interaction (HRI). We propose an interface based on the Kinect sensor for selecting by pointing (SEPO) in a 3D real-world situation, where the user points to a target object or location and the interface returns the 3D position coordinates of the target. Using our interface we perform three experiments to study the precision and accuracy of human pointing in typical household scenarios: pointing to a “wall”, pointing to a “table”, and pointing to a “floor”. Our results show that the proposed SEPO interface enables users to point at and select objects with an average 3D position accuracy of 9.6 cm in household situations. A demonstration of the SEPO interface can be seen here.

Camilo Perez Quintero, Romeo Tatsambon Fomena, Azad Shademan, Nina Wolleb, Travis Dick, Martin Jägersand: SEPO: Selecting by pointing as an intuitive human-robot command interface. IEEE ICRA 2013: 1166-1171.
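One way to picture how a pointing gesture can yield 3D target coordinates on a wall, table or floor is a ray-plane intersection. The sketch below is an illustration under stated assumptions, not the SEPO method itself: it assumes the pointing ray (an origin and a direction, for instance derived from tracked body joints) and the surface plane are already known, and the function name `pointing_target` is hypothetical.

```python
def pointing_target(origin, direction, plane_point, plane_normal, eps=1e-9):
    """Return the 3D point where the pointing ray meets a plane, or None."""
    denom = sum(d * n for d, n in zip(direction, plane_normal))
    if abs(denom) < eps:
        return None  # the ray runs parallel to the surface
    t = sum((p - o) * n for p, o, n in zip(plane_point, origin, plane_normal)) / denom
    if t < 0:
        return None  # the surface lies behind the user
    return tuple(o + t * d for o, d in zip(origin, direction))

# Assumed setup: floor plane z = 0, hand 1.5 m above it, pointing forward and down.
floor_hit = pointing_target(
    origin=(0.0, 0.0, 1.5),
    direction=(1.0, 0.0, -1.0),
    plane_point=(0.0, 0.0, 0.0),
    plane_normal=(0.0, 0.0, 1.0),
)
```

Comparing such an intersection point with a known target position is one plausible way to quantify pointing error for each surface type.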

|

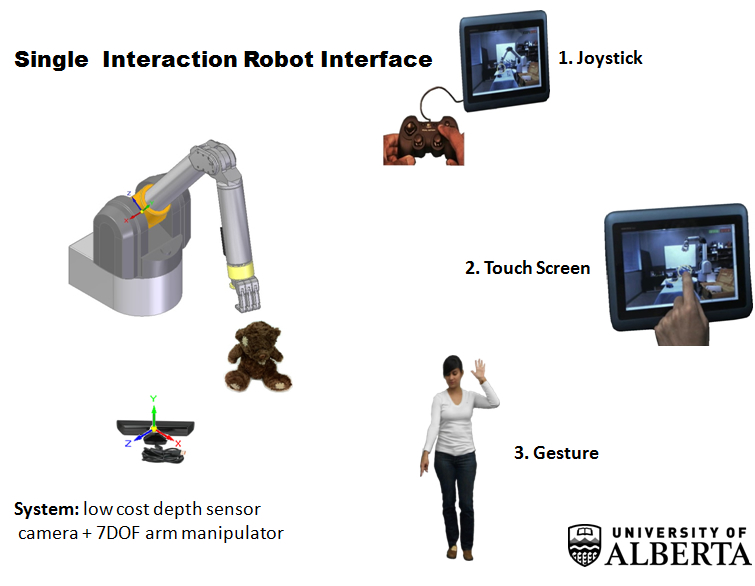

VIBI: Assistive Vision-Based Interface for Robot Manipulation

People with upper-body disabilities can benefit from the use of robot arms to perform everyday tasks. However, the adoption of this kind of technology has been limited by the complexity of robot manipulation tasks and the difficulty of controlling a multiple-DOF arm using a joystick or a similar device. Motivated by this need, we present an assistive vision-based interface for robot manipulation. Our proposal is to replace the direct joystick motor control interface present in a commercial wheelchair-mounted assistive robotic manipulator with a human-robot interface based on visual selection. The scene in front of the robot is shown on a screen, and the user can then select an object with our novel grasping interface. We develop computer vision and motion control methods that drive the robot to that object. Our aim is not to replace user control, but to augment user capabilities through different levels of semi-autonomy, while leaving the user with a sense of being in control of the task. Two disabled pilot users were involved at different stages of our research. The first pilot user took part in the interface design, along with rehabilitation experts. The second took part in user studies, along with an 8-subject control group, to evaluate our interface. Our system reduces robot instruction from a 6-DOF task in continuous space to either a 2-DOF pointing task or a discrete selection task among objects detected by computer vision. A demonstration of our interface can be seen here.

Camilo Perez Quintero, Oscar Ramirez, Martin Jägersand: VIBI: Assistive vision-based interface for robot manipulation. IEEE ICRA 2015: 4458-4463.
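The reduction from 6-DOF control to a 2-DOF pointing task or a discrete selection can be sketched as mapping a screen position onto one of the objects found by a detector. The code below is only an illustration: the `Detection` class, the bounding-box representation and the tie-breaking rule for overlapping boxes are assumptions, not details of the VIBI interface.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    """Hypothetical detector output: a label and an axis-aligned box in pixels."""
    label: str
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height

    def contains(self, px, py):
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h

    def centre(self):
        return (self.x + self.w / 2, self.y + self.h / 2)

def select_object(detections, px, py):
    """Map a 2-DOF screen selection to one detected object, or None."""
    hits = [d for d in detections if d.contains(px, py)]
    if not hits:
        return None
    # Overlapping boxes: prefer the one whose centre is closest to the selection.
    return min(hits, key=lambda d: (d.centre()[0] - px) ** 2 + (d.centre()[1] - py) ** 2)

# Assumed scene with two overlapping detections.
scene = [Detection("mug", 100, 100, 80, 80), Detection("bottle", 150, 90, 60, 160)]
picked = select_object(scene, 160, 120)
```

Once an object is chosen this way, the remaining degrees of freedom would be handled by the autonomous vision and motion control components rather than by the user.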

|

|

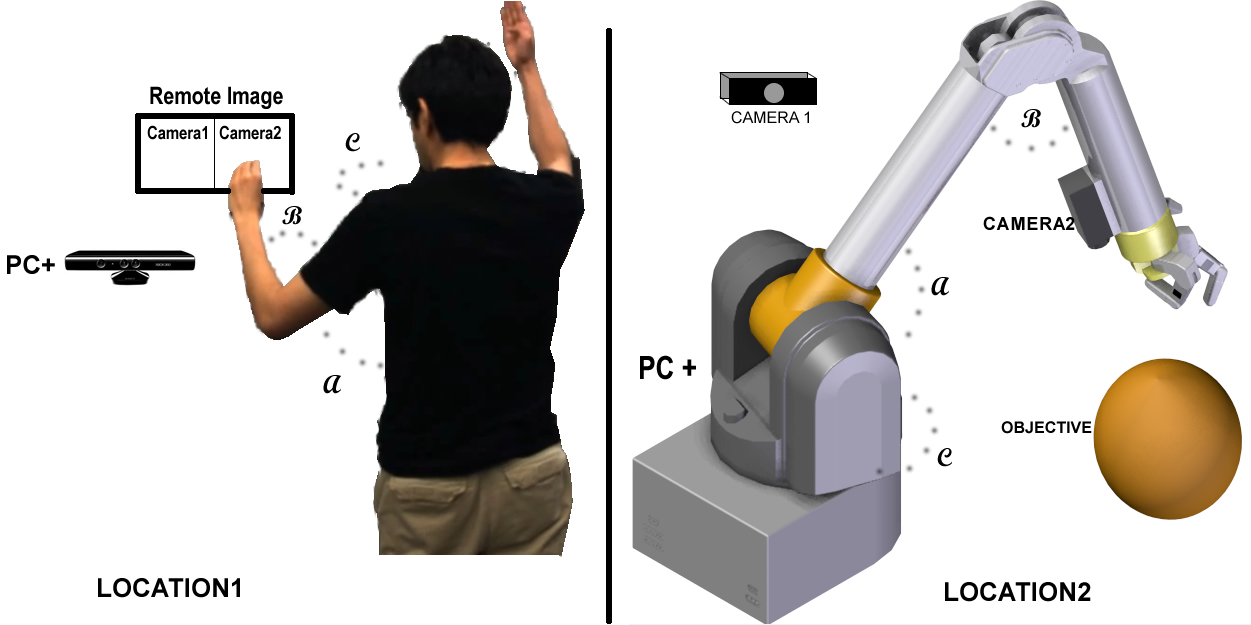

Interactive Teleoperation Interface for Semi-Autonomous Control of Robot Arms

We propose and develop an interactive semi-autonomous control interface for robot arms. Our system consists of two interaction modes: (1) a user can naturally control a robot arm through a direct linkage between the tracked human skeleton and the arm motion; (2) an autonomous image-based visual servoing routine can be triggered for precise positioning. Coarse motions are executed by human teleoperation and fine motions by image-based visual servoing. A successful application of our proposed interaction is presented for a WAM arm equipped with an eye-in-hand camera. A demonstration of our interface can be seen here.

Camilo Perez Quintero, Romeo Tatsambon Fomena, Azad Shademan, Oscar Ramirez, Martin Jägersand: Interactive Teleoperation Interface for Semi-autonomous Control of Robot Arms. Canadian Conference on Computer and Robot Vision (CRV) 2014: 357-363.
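To give a feel for the fine-positioning step, the following is a heavily simplified sketch of image-based visual servoing, not the controller used on the WAM arm. It assumes a single point feature in normalised image coordinates, a known constant depth, and camera motion restricted to x/y translation, in which case the classical interaction matrix reduces to L = -(1/Z) I and the control law v = -gain * pinv(L) * e becomes gain * Z * e. The function names, gain and step size are illustrative, and the hand-over from teleoperation to servoing is not shown.

```python
def ibvs_velocity(feature, target, depth, gain=0.5):
    """Camera x/y velocity for one point feature (normalised image coordinates)."""
    ex, ey = feature[0] - target[0], feature[1] - target[1]
    # With L = -(1/Z) * I, the law v = -gain * pinv(L) * e reduces to gain * Z * e.
    return (gain * depth * ex, gain * depth * ey)

def simulate(feature, target, depth, gain=0.5, dt=0.1, tol=1e-3, max_steps=500):
    """Integrate the feature motion until the image error drops below tol."""
    x, y = feature
    for step in range(max_steps):
        if max(abs(x - target[0]), abs(y - target[1])) < tol:
            return (x, y), step
        vx, vy = ibvs_velocity((x, y), target, depth, gain)
        # Feature dynamics under L: x_dot = -vx / Z, y_dot = -vy / Z.
        x += dt * (-vx / depth)
        y += dt * (-vy / depth)
    return (x, y), max_steps

# Assumed start: the feature is offset from the image centre after coarse teleoperation.
final_feature, steps_taken = simulate(feature=(0.2, -0.1), target=(0.0, 0.0), depth=1.0)
```

Under these assumptions the image error decays geometrically at each step, which is the behaviour that makes servoing suitable for the precise final approach. A full eye-in-hand controller would use the complete 6-DOF interaction matrix and an estimate of depth.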

|

|

Deictic visual pointing to instruct a robot arm and hand

Good Human-Robot Interfaces (HRI) will be important when robots are to solve unstructured tasks. Unlike manufacturing, where the robot repeats a simple task millions of times, robots acting in unstructured natural environments must be able to perform many different tasks. It is crucial that a human can quickly tell the robot what to do and then immediately, interactively verify that the robot performed the task correctly. Unlike conventional robot programming, which is generally performed before task execution, deictic visual pointing leads to an on-line HRI in which a human works interactively with a robot, instructing it one task at a time by gesturing and pointing to objects and locations. Such an interface is quite natural to humans: this is how we interact with other people when we teach them a task. We have prototyped three types of visual interfaces in this video:

|