Predictive display for a remote operated robot

Martin Jagersand, Adam Rachmielowski, Dana Cobzas, Azad Shademan, Neil Birkbeck

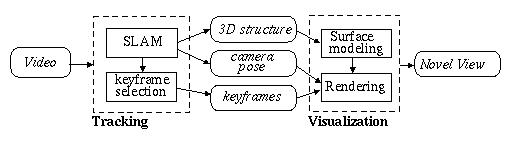

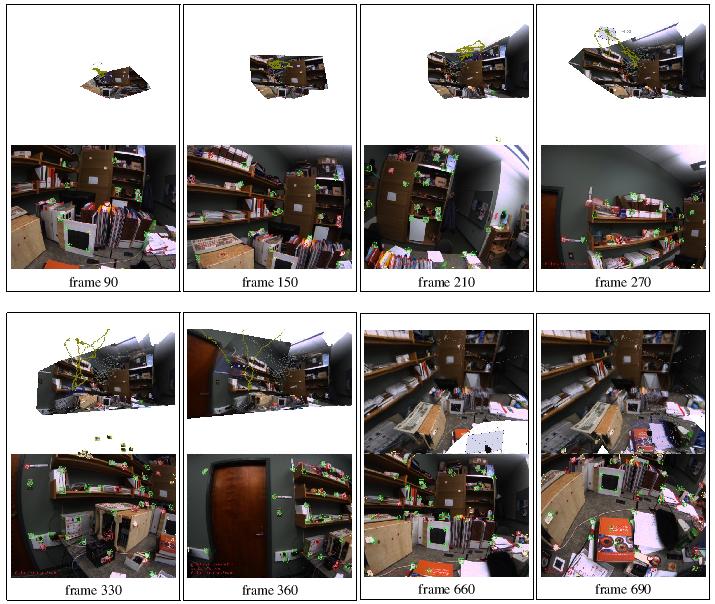

Description

A crucial factor in tele-robotics is the latency and quality of the visual feedback to the operator. It has been shown that as little as 0.4 seconds of latency severely degrades performance. Yet the combined delays from distance and network switches in practice add up to seconds for on-earth or low-orbit space tele-operation. We are working on predictive display systems that render, from the desired operator position, a visualization of a scene model acquired on-line. To achieve the required rendering speed, rendering is implemented on the graphics card using hardware acceleration.

A predictive display system should support on-line robot (camera) tracking and immediate rendering of the robot environment at the operator site. We have proposed three predictive display systems. In the two oldest the modeling is done off-line, while the most recent one supports on-line modeling. Our main predictive display projects are (starting with the most recent; the camera tracking method and model type are listed in brackets):

1. Monocular SLAM with realtime modeling and visualization [SLAM -- triangulated sparse geometry]
2. SSD tracking and predictive display [3D SSD tracking -- sparse geometry with dynamic texture]
3. Off-line panoramic model for predictive display [localization -- cylindrical panorama with depth]

Monocular SLAM with realtime modeling and visualization

Recently we proposed a video-based system that constructs and visualizes a coarse graphics model in real time and automatically saves a set of images suitable for later off-line dense reconstruction. Our implementation uses real-time monocular SLAM to compute and continuously extend a 3D model, and augments this with key-frame selection for storage, surface modeling, and on-line rendering of the current structure textured from a selection of key-frames. This rendering gives an immediate and intuitive view of the scene.
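The key-frame selection step mentioned in the monocular SLAM system can be illustrated with a minimal sketch. The page does not state the selection criterion, so the rule below is an assumption: a frame is kept when the camera has translated or turned by more than a threshold since the last kept key-frame. The function name `select_keyframes`, the `(x, y, z, yaw_deg)` pose format, and both thresholds are hypothetical.

```python
import math


def select_keyframes(poses, min_translation=0.2, min_rotation_deg=15.0):
    """Return the indices of frames kept as key-frames.

    poses: list of (x, y, z, yaw_deg) camera poses, one per frame.
    Assumed rule (not taken from the project page): keep a frame when
    the camera has moved or turned enough relative to the last
    key-frame that was kept.
    """
    keyframes = []
    last = None
    for i, (x, y, z, yaw) in enumerate(poses):
        if last is None:
            # Always keep the first frame as the initial key-frame.
            keyframes.append(i)
            last = (x, y, z, yaw)
            continue
        dist = math.dist((x, y, z), last[:3])
        # Wrap the yaw difference into [-180, 180) before comparing.
        dyaw = abs((yaw - last[3] + 180.0) % 360.0 - 180.0)
        if dist >= min_translation or dyaw >= min_rotation_deg:
            keyframes.append(i)
            last = (x, y, z, yaw)
    return keyframes


poses = [
    (0.00, 0.0, 0.0, 0.0),
    (0.05, 0.0, 0.0, 0.0),   # too close to frame 0
    (0.25, 0.0, 0.0, 0.0),   # moved far enough
    (0.25, 0.0, 0.0, 20.0),  # turned far enough
    (0.30, 0.0, 0.0, 25.0),  # small change only
]
print(select_keyframes(poses))
```

A rule of this kind keeps the stored image set small while still covering the scene from distinct viewpoints, which is what texturing and later dense reconstruction need.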

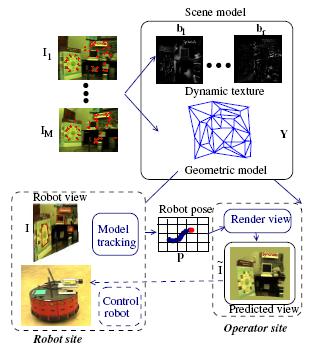

SSD tracking and predictive display

As an intermediate stage, we designed a system that uses a geometric and appearance model captured in a training phase using structure-from-motion (SFM) with an uncalibrated camera. The sparse geometric model resulting from SFM is augmented with an appearance-based dynamic texture that represents fine-scale detail: a time-varying, view-dependent texture is modulated to compensate for the coarseness of the sparse geometric model. After about 100 frames, the geometric model is integrated into a registration-based tracking algorithm that allows stable tracking of the full 3D pose of the robot. The composite graphics model is stored at the operator site and used to provide immediate visual feedback in response to operator commands.

More about the 3D SSD tracking

More about the dynamic texture modeling
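The sum-of-squared-differences (SSD) objective behind registration-based tracking can be shown with a deliberately simplified sketch. It is not the 3D SSD tracker described above, which estimates full 3D pose: the example only searches integer 2D translations by brute force, on images stored as nested lists of gray values. The function names `ssd` and `track_translation` are hypothetical.

```python
def ssd(template, image, dx, dy):
    """Sum of squared differences between the template and the image
    patch whose top-left corner is at column dx, row dy."""
    total = 0.0
    for r, row in enumerate(template):
        for c, t in enumerate(row):
            diff = image[r + dy][c + dx] - t
            total += diff * diff
    return total


def track_translation(template, image):
    """Exhaustively search all integer offsets and return the (dx, dy)
    with the smallest SSD. A translation-only stand-in for the warp
    parameters that a registration-based tracker would estimate."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best = None
    for dy in range(ih - th + 1):
        for dx in range(iw - tw + 1):
            score = ssd(template, image, dx, dy)
            if best is None or score < best[0]:
                best = (score, dx, dy)
    return best[1], best[2]


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 6, 0],
    [0, 0, 0, 0, 0],
]
template = [
    [9, 8],
    [7, 6],
]
print(track_translation(template, image))
```

Practical trackers usually replace the exhaustive search with an iterative update of the warp parameters, but the quantity being minimized is the same squared intensity difference.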

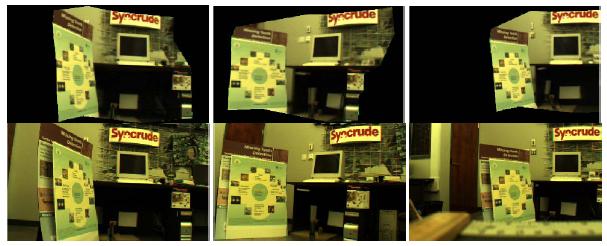

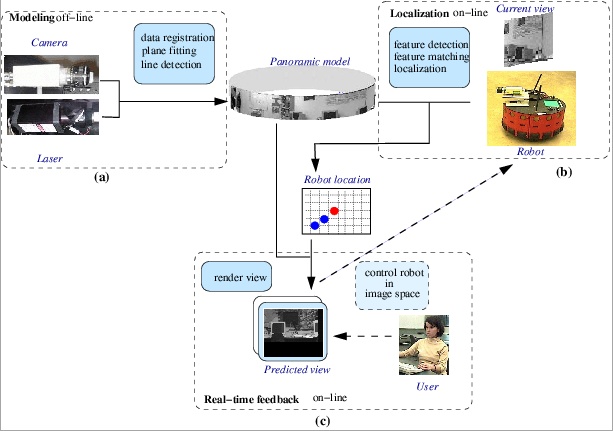

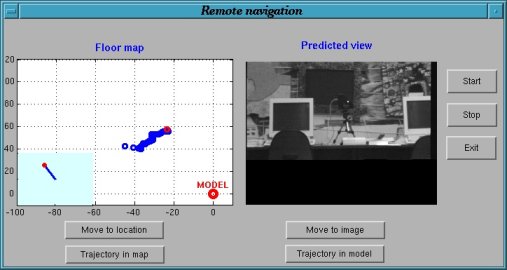

Off-line panoramic model for predictive display

The first predictive display system uses a panoramic image mosaic augmented with depth information, built using cameras and range sensors. To demonstrate the applicability of the proposed model to robot navigation, we implemented a localization algorithm that uses an image taken by the robot camera and matches it against the model to find the current robot location. As the model has both range and appearance information, it can be used to synthesize novel images in response to operator commands.
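A minimal sketch can show why a panorama with depth is enough to synthesize views from new positions. The parametrization is an assumption, not taken from the project page: a full 360-degree cylindrical panorama in which column `u` maps linearly to azimuth, row `v` to height on a unit cylinder, and the stored depth is the radial distance from the cylinder axis. The function names `backproject` and `reproject` are hypothetical.

```python
import math


def backproject(u, v, radial_depth, width, height):
    """Map panorama pixel (u, v) with radial depth to a 3D point.

    Assumes a full 360-degree cylinder, so the focal length in pixels
    is width / (2 * pi), and an image center row at height / 2.
    """
    f = width / (2.0 * math.pi)
    theta = u / f                      # azimuth in radians
    h = (height / 2.0 - v) / f         # height on the unit cylinder
    return (
        radial_depth * math.cos(theta),
        radial_depth * math.sin(theta),
        radial_depth * h,
    )


def reproject(point, cam_xy, width, height):
    """Column, row and radial depth of a 3D point in a panorama whose
    axis has been moved to cam_xy in the ground plane.

    The point must not lie on the new cylinder axis (depth zero).
    """
    f = width / (2.0 * math.pi)
    x = point[0] - cam_xy[0]
    y = point[1] - cam_xy[1]
    d = math.hypot(x, y)
    theta = math.atan2(y, x) % (2.0 * math.pi)
    u = theta * f
    v = height / 2.0 - f * point[2] / d
    return u, v, d


# A pixel straight ahead at 2 m, viewed again after moving 1 m forward.
p = backproject(0, 50, 2.0, width=360, height=100)
print(reproject(p, (1.0, 0.0), width=360, height=100))
```

Applying `backproject` to every panorama pixel and `reproject` for the commanded robot position gives a forward warp of the panorama; a full renderer would also need to handle occlusions and holes, which this sketch omits.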


Demos

Tracking with predictive display

References

Rachmielowski, A., Birkbeck, N., Jagersand, M. and Cobzas, D., Realtime visualization of monocular data for 3D reconstruction, Canadian Conference on Computer and Robot Vision (CRV) 2008 - submission

Cobzas, D., Jagersand, M. and Zhang, H., A Panoramic Model for Remote Robot Environment Mapping and Predictive Display, International Journal of Robotics and Automation, 20(1):25-34, 2005

Cobzas, D. and Jagersand, M., Tracking and Predictive Display for a Remote Operated Robot using Uncalibrated Video, IEEE Conference on Robotics and Automation (ICRA) 2005, pp. 1859-1864, Best vision paper award

Cobzas, D., Jagersand, M. and Zhang, H., A Panoramic Model for Robot Predictive Display, Vision Interface 2003, pp. 111-118, Best student paper award

Yerex, K., Cobzas, D. and Jagersand, M., Predictive Display Models for Tele-Manipulation from Uncalibrated Camera-capture of Scene Geometry and Appearance, IEEE Conference on Robotics and Automation (ICRA) 2003, pp. 2812-2817