In the following pages you will find an informal but detailed overview of the motivation behind the system and its internal workings.

Capturing and modeling real-world scenes and objects from video alone is a long-standing problem in computer vision and graphics. Unlike active methods (such as laser range finders), video-based methods allow quick and non-intrusive capture using inexpensive commodity cameras (e.g. web cams, digital cameras or camcorders), and a consumer PC is sufficient for the processing and rendering.

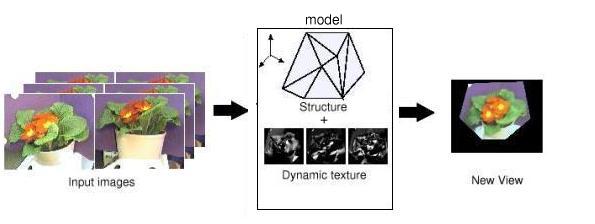

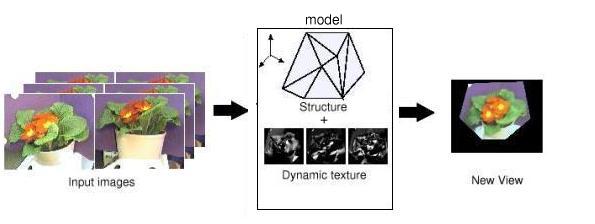

In our method, uncalibrated video from different views of an object or scene is compiled into a geometry and texture model. The geometric modeling is based on recent results in non-Euclidean multiview geometry, and the texture is represented using a novel texture basis that captures fine-scale geometric and light variation over the surface. During rendering, view-dependent textures are blended from this basis and warped onto the geometric model to generate new views.
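The blending step can be pictured as a weighted sum of basis images. The following is only a minimal sketch under stated assumptions, not the system's actual code: the function name `blend_texture`, the flat-list image representation, and the toy basis and coefficients are all hypothetical. In the real system the weights would depend on the viewpoint, and the blended texture would then be warped onto the geometric model.

```python
def blend_texture(basis, coeffs):
    """Return the weighted sum of basis images.

    basis  -- list of K images, each a flat list of N pixel intensities
    coeffs -- list of K blending weights (assumed here to come from the view)
    """
    if not basis:
        raise ValueError("basis must contain at least one image")
    if len(basis) != len(coeffs):
        raise ValueError("need exactly one coefficient per basis image")
    n_pixels = len(basis[0])
    if any(len(img) != n_pixels for img in basis):
        raise ValueError("all basis images must have the same size")

    # Accumulate weight * image, pixel by pixel.
    texture = [0.0] * n_pixels
    for weight, img in zip(coeffs, basis):
        for i, value in enumerate(img):
            texture[i] += weight * value
    return texture


# Toy example (hypothetical data): two basis images of 4 pixels each.
basis = [
    [0.5, 0.5, 0.5, 0.5],     # assumed "average appearance" image
    [0.25, -0.25, 0.0, 0.5],  # assumed "variation" image
]
texture = blend_texture(basis, [1.0, 0.5])
```

Because the blend is a plain linear combination per pixel, it maps naturally onto SIMD instructions or onto per-pixel combiner hardware on a graphics card.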

A visual breakdown of the process.

On this web site you can find both details on the theory and downloads of a working system and some sample models. The system is capable of frame-rate rendering by running the calculation-intensive code, depending on your installed hardware, either on the CPU through MMX SIMD extensions or on the graphics card using NVIDIA register combiners. Rendering and viewing of models is easy to set up and use on either Windows or Linux PCs. The software for capturing and processing video into models currently runs only under Linux and is somewhat more complex to install, but it is doable for someone with intermediate knowledge of how to configure Linux for digital video.