sliding tile puzzle

sliding tile puzzle

Martin Gardner SciAm Aug 1957 Sam Loyd, America's Greatest Puzzlist

15-puzzle dates from 1880, inventor Chapman, not Sam Loyd

MG book 5 Klein bottle, op-art and sliding block puzzles chapter 20

we consider sliding tile puzzle with various dimensions

15 puzzle has 4 rows 4 columns

assume at least 2 rows (why?), similarly at least 2 columns

rip paper, make cells 1 2 3 4 5

goal state 1 2 3

4 5

solve from this? 5 4 3

2 1

solve from this? 4 2 5

1 3

solve from this? 4 2 5

3 1

2 2 sliding tile puzzle

observe: on 2x2, a slide is a rotation

goal state a b

c .

solvable

a b a . . a c a c a c .

c . c b c b . b b . b a

. c b c b c b . . b a b

b a . a a . a c a c . c

not solvable

a c a . . a b a b a b .

b . b c b c . c c . c a

. b c b c b c . . c a c

c a . a a . a b a b . b

solvable?

for r,c each at least 2, for any fixed final state, exactly .5 of the states can be transformed into the final state (proof ?)

call a state solvable if it can be transformed into the row-by-row sorted state (with blank last)

so .5 of all states are solvable

a parity check tells whether an arbitrary state is solvable

the number of inversions of a sliding tile position is the number of tile pairs x,y such that (when the position is written row-by-row as a permutation) x appears before y but x > y

position permutation inversions

3 1 4 (3 1 4 2 5 7 6 8) 3 out of order with 1, 2 so 2

2 5 7 1 ok with the rest of the tiles

_ 6 8 4 out of order with 2, so 1 more

2 ok with the rest ...

5 ok with the rest ...

7 out of order with 6, so 1 more

so position has a total of 4 inversions

| column number | solvability condition |

| odd | even number inversions |

| even | blank's row-from-bottom parity != inversions parity |

5 4 3 odd number cols, 4+3+2+1=10 inversions, solvable 2 1 _ 7 6 5 _ even number cols, 6+5+4+3+2+1=21 inversions, 4 3 2 1 blank in row 2 from bottom, solvable 7 6 5 4 even number cols, 6+5+4+3+2+1=21 inversions, 3 2 1 _ blank in row 1 from bottom, unsolvable

search sliding tile space

children ordered by blank-mv: U D L R

235

41_

U L

/ \

/ \

/ \

23_ 235

415 4_1

L U L

| / \

| / \

2_3 2_5 235

415 431 _41

D L L R U

/ \ / \ |

/ \ / \ |

213 _23 _25 25_ _35

4_5 415 431 431 241

... ... ... ... ...

exhaustive search

search algorithms so far random walk, bfs, dfs

each exhaustive

pro: will solve problem

con: maybe take too long

which to use?

before choosing, estimate state space size

(r,c) puzzle has (r*c)! states (why?)

state space adjacency graph has 2 components

solvable states, (rc)!/2 nodes

unsolvable states, (rc)!/2 nodes

so starting from a fixed state, wc (worst case) examine (rc)!/2 nodes

| dimension | number of states |

| 2 2 | 4! = 24 |

| 2 3 | 6! = 720 |

| 2 4 | 8! = 40 320 |

| 3 3 | 9! = 362 880 |

| 2 5 | 10! = 3.6 e 6 |

| 2 6 3 4 | 12! = 4.8 e 8 |

| 2 7 | 14! = .87 e 11 |

| 3 5 | 15! = 1.3 e 12 |

| 4 4 | 16! = 2.1 e 13 |

exhaustive search suffice?

random walk much slower than bfs, dfs, so ignore for this problem

bfs and dfs each take time proportional to the number of (nodes and) edges in the underlying graph

e.g. if on a graph with 1 000 000 edges bfs takes 1 hour, then on a graph with 2 000 000 edges we expect it to take about 2 hours

the sliding-tile puzzle state transition graph (nodes are states, 2 nodes are adjacent if we can slide between them) has average degree (number of neighbors) under 4, so a constant

so bfs runtime proportional to number of states

so bfs or iterative dfs (recursive dfs will probably have stack size too large) should work on 3x3

might also work for 4x4

for 4x4 there is another algorithm (A*) that works well, like bfs finds a shortest solution

for 4x4, if we do not care about shortest solution, we can use above special-purpose algorithm

because bfs finds a shortest solution, let us try a bfs approach rather than dfs

solving slide tile with bfs

in maze traversal

we consider adjacency graph of cells

use bfs to traverse this graph

what is the associated graph with sliding tile puzzle?

each node in graph is a sliding tile state

two nodes are adjacent if can single-slide between states

with this graph, we just use bfs as before

to implement sliding tile bfs in python

how will we record, for each state, whether we have seen it?

answer: use python dictionary of parents

each time we see a new state, add it to the dictionary

we have seen a state iff it is in the dictionary

-

my desktop: stile_search.py examines 70 000 states/s

3 3 no problem

4 4 intractable

since bfs, solution found is shortest

simple/stile/stile_search.py, input unsolvable 3 3 no solution found 181440 iterations 2.5 seconds 72900 iterations/sec nodes by level 0 1 1 2 2 4 3 8 4 16 5 20 6 39 7 62 8 116 9 152 10 286 11 396 12 748 13 1024 14 1893 15 2512 16 4485 17 5638 18 9529 19 10878 20 16993 21 17110 22 23952 23 20224 24 24047 25 15578 26 14560 27 6274 28 3910 29 760 30 221 31 2 32 0

we can move from any solvable position to solution

suppose you have solvable positions p1 and p2

you can move from p1 to final (all-sorted) position)

you can move from p2 to final position

so you can move from final position to p2

so you can move from p1 to final to p2

so you can move from p1 to p2

not hard to show that same holds for any two unsolvable positions q1 and q2 (use this trick. take any solvable (resp. unsolvable) position, and exchange labels on two largest tiles: new position is unsolvable (solvable))

so sliding stile search space graph has exactly two components

estimating next runtime

how can we predict runtime on larger size inputs?

wc runtime for breadth-first-search traversal roughly proportional to number of edges in graph

wc: no solution, whole component searched

e.g. modify stile_search.py: comment out print statements

e.g. st.33.4no: 181440 iterations 2.2 sec 82600 itn/sec

assume we know 3x3 wc runtime: we can then estimate other wc runtimes

use 3x3 runtime data to estimate 2x5 runtime data

number of edges in 3x3 search space graph?

4/9 * 9! = 4*8! positions have empty tile in corner, so 2 nbrs

4/9 * 9! = 4*8! positions have empty tile middle-edge, so 3 nbrs

1/9 * 9! = 1*8! positions have empty tile in center, so 4 nbrs

average degree 2*4/9 + 3*4/9 + 4*1/9 = 24/9 = 8/3

sum of neighbours, over all nodes 8/3 * 9!

sum of neighbours in a graph is 2 times number of edges (each edge appears 2 times as a neighbour)

so number of edges is 1/2 sum of neighbours

number of edges in 3x3 search space graph is .5 * 8/3 * 9!

number of edges in 2x5 search space graph?

average degree 13/5 (exercise)

so expect wc 2x5 runtime (13/5 * 10!) / (8/3 * 9!) = 26*3/8 = 9.75 times 3x3 wc

1814400 iterations 21.5 seconds 84400 itn/s

experimental ratio close to what was expected,

how long to solve 4x4 tile puzzle?

average degree 3 (exercise)

so expect wc 4x4 runtime to be about

(16! * 3 ) / (9! * 2.67) = 64 864 800 times as long as 3x3 case

about 1580 days … too long!

humans easily solve 4x4 STP in few minutes: how?

idea: best-first search

special purpose algorithm

special purpose algorithms for sliding tile exist

no search: repeatedly find next move

need to prove correctness

usually, solution not shortest

an inductive baby-steps approach

break problem into baby steps (achievable subproblems)

(cultural reference: movie What About Bob?)

e.g. 2x3 puzzle?

subproblem A place top row

subproblem B without touching top row, finish puzzle

e.g. 2x3 puzzle (different method)

subproblem X place leftmost column

subproblem Y without touching that column, finish puzzle

start * - 2

3 * 1

post A 1 2 3

* * -

post B 1 2 3 (or discover unsolvable)

4 5 -

........................................

other method

post X 1 * *

4 * -

post Y 1 2 3 (or discover unsolvable)

4 5 -

step A 1, 2, 3, blank => top two rows * * 2 3 - 2 3 * * ==> * 1 * * 1 - * * * step B (use only top two rows) 1, 2, 3, blank => top row 3 - 2 1 2 3 * 1 * ==> - * * * * * * * * step C (on bottom two rows) use 2x3 method to finish 1 2 3 1 2 3 1 2 3 - 4 * ==> 4 5 6 => 4 5 6 or unsolvable 6 * 5 * - * 7 8 -

are these methods guaranteed to work?

will work if you always leave at least 2 rows and 2 columns

what can go wrong if you don't?

e.g. 2x4, what can happen if you first place row 1, and then place row 2?

e.g. 3x3, what can happen if you first place 1st,2nd cells of row 1, and then try to place 3rd cell?

see 15puzzle.py in class github directory stile

heuristic search

heuristic search is guided search

a heuristic function is used to decide which node of the search tree to explore next

Dijkstra's single source shortest path algm

solves single source shortest path on weighted graphs

weighted graph each edge has a weight (or cost, or length)

greedy: at each step remove fringe node with min distance-so-far

optimal on graphs (or acyclic digraphs) with non-negative edge weights: d-s-f of fringe node with min d-s-f is length of shortest path from start to that node

efficient Dijkstra implementation uses priority queue: PQ.remove() returns node with max priority (here, min distance-so-far)

A*

solves single-source single-target problem

A* uses heuristic to estimate remaining dist to target

if heuristic always less/equal actual cost, then A* finds shortest path

usually considers fewer nodes than Dijkstra

fringe = PQ()

fringe.add(start, 0)

parent, cost, done = {}, {}, []

parent[start], cost[start] = None, 0

#cost[v] will be min dist-so-far from start to v

#if heuristic(target, v) is always less/equal than min dist(target,v),

#then final cost[v] will be min dist from start to v

while not fringe.empty():

current = fringe.remove() # min priority

done.add(current)

if current == target: break

for next in nbrs(current):

if next not in done:

new_cost = cost[current] + wt(current, next)

if next not in cost or new_cost < cost[next]:

cost[next] = new_cost

priority = new_cost + heuristic(target, next)

fringe.add(next, priority)

parent[next] = current

heuristic: straight line dist to B

(easy to compute using latitute/longitude coordinates)

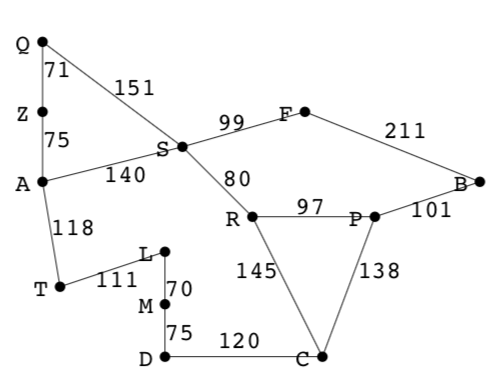

B A C D F L M P Q R S T Z

0 366 160 242 176 244 241 100 380 193 253 329 374

initialize: (other costs initially infinite)

A

cost 0

priority 366

* current A cost 0

---------------------------------

A nbrs:

S newcost 0 + 140

T newcost 0 + 118

Z newcost 0 + 75

S T Z

cost 140 118 75

heur 253 329 374

pri 393 447 449

* current S cost 140

--------------------------------

S nbrs:

A done

F newcost 140 + 99 239 update

Q newcost 140 + 151 291 update

R newcost 140 + 80 220 update

S T Z F Q R

cost 140 118 75 239 291 220

heur 253 329 374 176 380 193

pri 393 447 449 415 671 413

* current R cost 220

--------------------------------

... exercise: do the next step ...

... check your answer with /stile/astar.py ...

A* sliding tile

how can we use A* for sliding tile problem?

usual state space adjacency graph

node: sliding tile state (position)

edge: a pair of states, can single-slide from one to other

cost of a path: sum of number of edges from start (unit-cost weights)

choice of heuristic function:

number of misplaced tiles

sum, over all tiles, of Manhattan distance (a.k.a. taxicab distance) from current to final location

run simple/play_stile.py to see these heuristic values

each of these heuristic function always less/equal to number of moves to solve, so with A* each yields shortest solution

to execute A* sliding tile in our code base

install VM and follow instructions, or download code from github, run make in /lib

run bin/gpa_puzzles-cli -h

run bin/gpa-puzzles-cli sliding_tile A*

now type help

position misplaced score 6 Manhattan score 12 3 5 2 tile 1 2 3 4 5 6 7 8 1 2 3 4 5 6 7 8 4 6 7 misplaced x x x x x x 4+1+2+0+1+1+3+0 - 8 1

how humans solve sliding tile

humans and computers often solve problems differently

solve 2x3 sliding tile puzzle by reducing it to a 2x2 puzzle

consider any 2x3 puzzle with tiles 1-5

claim A: we can always get to position with numbers in left column correct (1, 4)

claim B: after getting to that position, original problem solvable if and only if solving remaining 2x2 problem (while leaving left column in place) solvable

get to 1 * * [ how ? ]

* *

now where is 4? two cases

case 1: 1 * * done :)

4 *

case 2: 1 * * 1 4 * 1 * 4

* 4 * * * *

in each of these cases 1 * *

get to this * 4

then ... * * ... * * * ... 1 * *

1 * 4 1 4 4 *

end of proof :)

each tile move preserves the solvability condition

e.g. assume number of columns is odd

solvability condition: number of inversions is even

each tile move preserves parity of number of inversions (why?)

so original 2x3 position solvable if and only if position with 1,4 in place solvable

two cases

case: clockwise cyclic order of other three tiles is (2,3,5)

subproblem solvable (why?), original position solvable (why?), original position had even number of inversions (why?)

case: clockwise cyclic order of other three tiles is (2,5,3)

subproblem unsolvable (why?), original position has odd number inversions (why?) so unsolvable

for every complex problem there is an answer that is clear, simple, and wrong H.L. Mencken

simple sliding tile algorithm

for each tile in order, starting from first tile:

without moving any tiles already placed, slide next tile into place

simple 2x3 sliding tile algorithm

slide tile 1 into place

without moving tile 1, slide tile 2 into place

without moving tiles 1,2, slide tile 3 into place

without moving tiles 1,2,3, slide tile 4 into place

without moving tiles 1,2,3,4 slide tile 5 into place

correctness proof show algorithm solves problem for all inputs

correctness counterexample give input where algorithm does not work

is simple sliding tile algorithm correct?

give proof or counterexample

also

open problem: linear time?