Line and Plane based Incremental Surface Reconstruction

Junaid Ahmad

Supervisor: Martin Jägersand

Abstract

Simultaneous Localization and Mapping (SLAM) has long been popular and is gaining further traction with research on autonomous vehicles and robot manipulation. Computing accurate surface models from sparse Visual SLAM 3D point clouds is difficult. Earlier work addressed this problem with space carving methods that use map points and lines generated from those points. These methods have drawbacks of their own, because point clouds and lines alone do not add sufficient structural information to the scene.

In this thesis, we take the natural next step of also computing and verifying 3D planes bottom-up from lines. Our system takes the real-time stream of new cameras and 3D points from a SLAM system and incrementally builds the 3D scene surface model. In previous work, 3D line segments were detected in relevant keyframes and fed to the modeling algorithm for surface reconstruction. This has an immediate drawback: some of the line segments generated in each keyframe are redundant and mark the same objects at shifted positions, creating clutter in the map. To avoid this issue, we track the detected 3D planes over keyframes for consistency and data association. Furthermore, the smoother and better-aligned model surfaces result in more photo-realistic rendering using keyframe texture images. Compared to other incremental real-time surface reconstruction methods, our model has less than half the triangles, and we achieve better metric reconstruction accuracy on the EuRoC MAV benchmarks. We also tested our method on various off-the-shelf cameras to check that it generalizes.

Overview

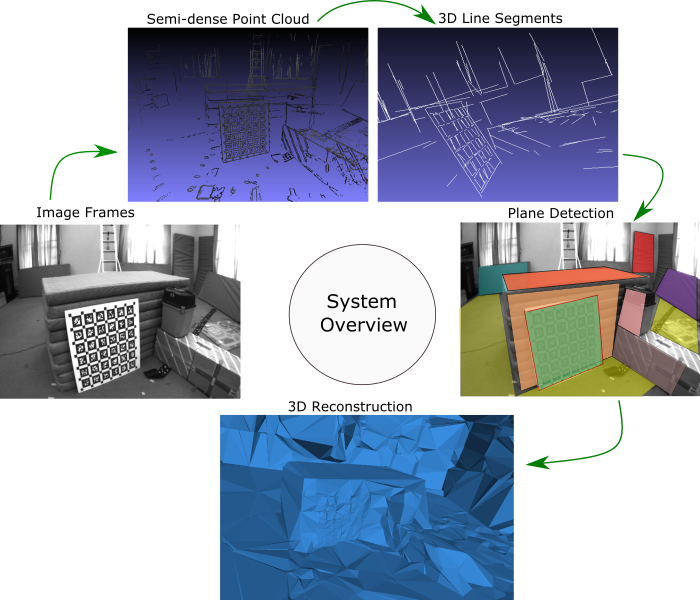

Previous methods rely on points and line segments to reconstruct 3D scenes in a real-time setup using 3D Delaunay triangulation with visibility information from viewing rays. These systems use sparse point clouds so that they can run in real time, but the reconstruction quality is poor. Using semi-dense point clouds and lines provides more structural information and improves the reconstruction quality. In this thesis, we propose a plane-based approach that detects 3D planes in a scene in order to reconstruct the actual 3D structures. The surfaces reconstructed by our method align more accurately with the real structures. We are also able to reduce the number of points fed to the system, which makes our modeling process faster.

Pipeline of our Proposed System

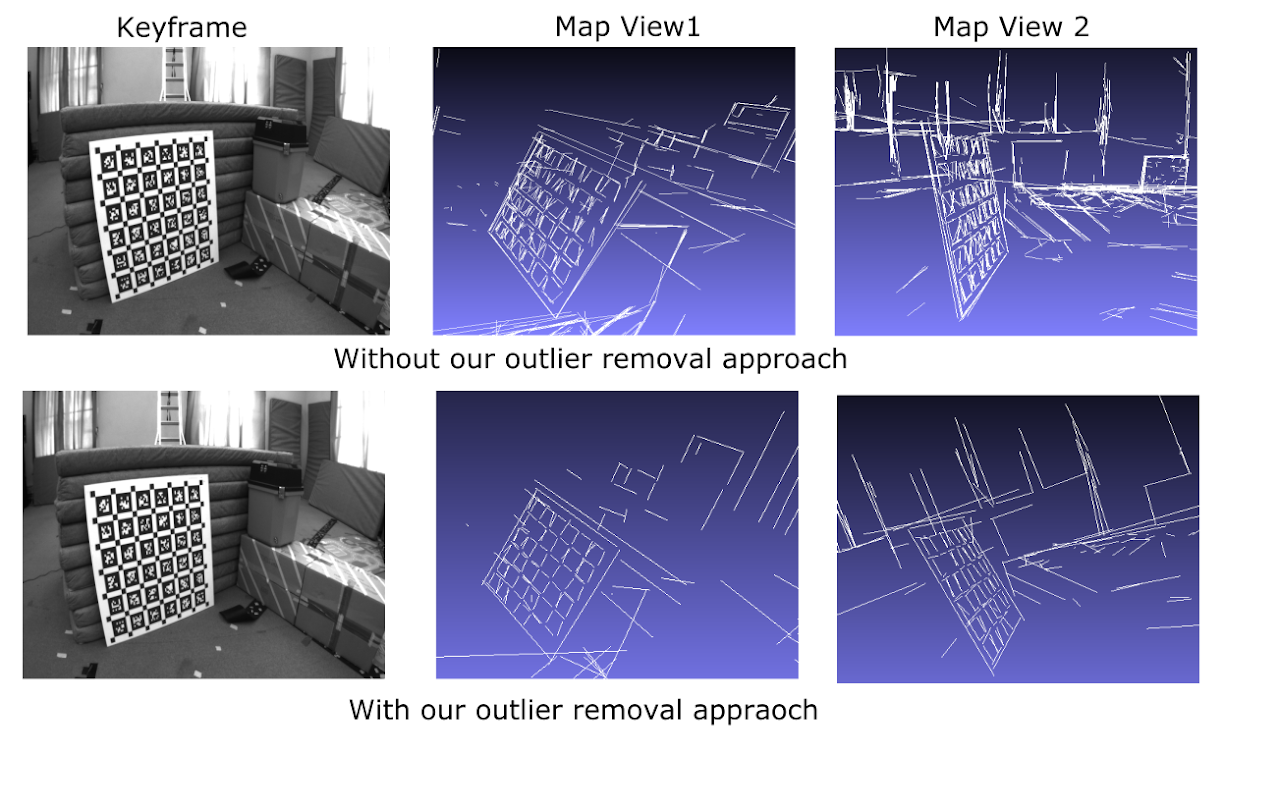

Outlier Removal

To detect accurate planes, we need accurate 3D line segments from the scene. Previous methods detect a large number of 3D line segments, many of which have depth inconsistencies and do not represent real planar structures. We use a RANSAC-based approach to remove pixels that do not lie on the best-fit plane. Our method drastically reduces the number of lines detected in every keyframe. This would not benefit algorithms that require a large number of lines or points to reconstruct surfaces, but it is very useful for detecting planar structures in the scene. The figure on the right compares our method against He et al.
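The RANSAC step described above can be illustrated with a minimal sketch. It is not the thesis implementation: it assumes the candidate pixels have already been back-projected to 3D points, and the function names, the distance threshold, the iteration count, and the fixed seed are all hypothetical choices made for illustration.

```python
import math
import random


def plane_from_points(p, q, r):
    """Return (unit normal n, offset d) with n.x + d = 0, or None if collinear."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Fit a plane by RANSAC; return ((n, d), indices of inlier points).

    threshold is the maximum point-to-plane distance for an inlier
    (assumed here to be in metres); both defaults are illustrative.
    """
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate (collinear) sample
        n, d = plane
        inliers = [i for i, p in enumerate(points)
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Points whose indices are not returned as inliers would be the ones discarded before line segments are passed on to plane detection.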

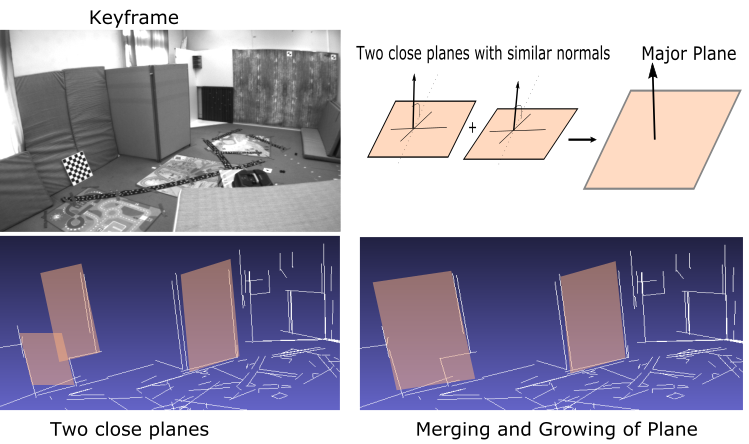

Plane Detection, Validation, and Merging

We perform an exhaustive search for planes from intersecting lines. These planes are validated based on the number of lines bounded by the plane. The validated planes are then merged to form major planar structures. The size of the planes is constrained so that they do not merge seamlessly into larger planar regions such as walls and floors. The planes are matched in consecutive keyframes for consistency and data association. This drastically reduces the number of planes in the scene and the search space in every new keyframe. The figure on the left shows the merging of planes with similar normals and distances into a common major plane.
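Merging planes with similar normals and distances can be sketched as a greedy grouping. This is only an illustration under stated assumptions: planes are represented as a unit normal `n` and an offset `d` with `n.x + d = 0`, the thresholds `max_angle_deg` and `max_offset` are hypothetical, and the size constraint mentioned above is not modelled.

```python
import math


def _average(members):
    """Mean plane of consistently oriented (normal, offset) members."""
    s = [sum(m[0][i] for m in members) for i in range(3)]
    norm = math.sqrt(sum(c * c for c in s))
    return [c / norm for c in s], sum(m[1] for m in members) / len(members)


def merge_planes(planes, max_angle_deg=10.0, max_offset=0.05):
    """Greedily group planes (n, d) whose normals and offsets are similar.

    Returns a list of groups, each a dict with the averaged "normal" and
    "offset" plus the list of "members" that were merged into it.
    """
    cos_thresh = math.cos(math.radians(max_angle_deg))
    groups = []
    for n, d in planes:
        for g in groups:
            dot = sum(a * b for a, b in zip(n, g["normal"]))
            # (n, d) and (-n, -d) describe the same plane, so align the sign.
            sn, sd = (n, d) if dot >= 0 else ([-c for c in n], -d)
            if abs(dot) >= cos_thresh and abs(sd - g["offset"]) <= max_offset:
                g["members"].append((sn, sd))
                g["normal"], g["offset"] = _average(g["members"])
                break
        else:
            groups.append({"normal": list(n), "offset": d,
                           "members": [(list(n), d)]})
    return groups
```

Because the grouping is greedy, the result can depend on the order in which planes arrive; in an incremental setting that order would naturally be the keyframe order.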

Experiments and Results

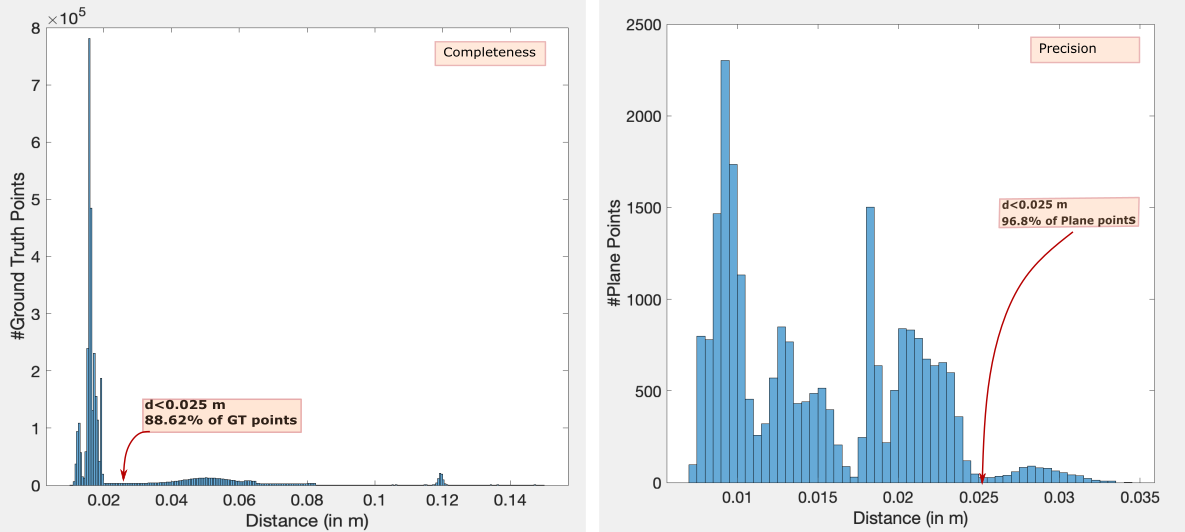

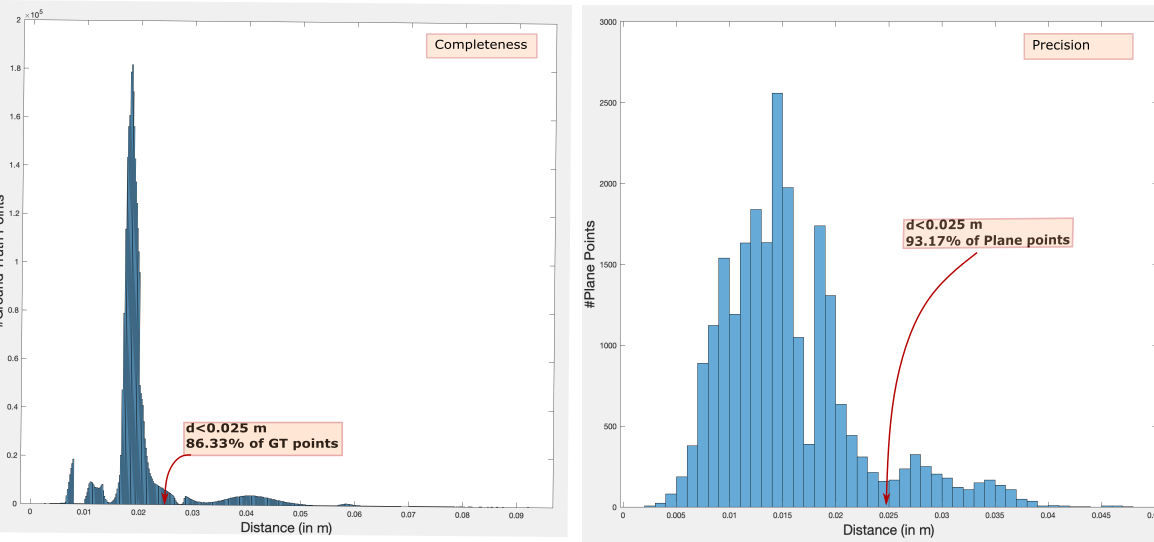

We compare our reconstructed 3D model with the point-based and line-based models using two metrics: Completeness and Precision.

Completeness measures the distance from the ground truth (GT) points to the 3D model and reports the percentage of ground truth points that are within 25 mm of the model. This metric tells us how close the ground truth points are to the reconstructed model. To evaluate it, we iterate over all the ground truth points and calculate the orthogonal distance to the nearest triangle of the mesh using barycentric coordinates.
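As a rough sketch of how such a completeness score could be computed, the code below finds the closest point on each triangle with the standard barycentric region test (as given in Ericson's Real-Time Collision Detection) and counts the ground truth points within the tolerance. The function names, the brute-force search over all triangles, and the assumption that coordinates are in metres (so 25 mm is `0.025`) are illustrative and not taken from the thesis code.

```python
import math


def _sub(u, v):
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])


def _dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def _along(o, d, t):
    return (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])


def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle abc (non-degenerate triangle assumed)."""
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return a                                   # vertex region A
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return b                                   # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return _along(a, ab, d1 / (d1 - d3))       # edge AB
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return c                                   # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return _along(a, ac, d2 / (d2 - d6))       # edge AC
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return _along(b, _sub(c, b), w)            # edge BC
    denom = 1.0 / (va + vb + vc)                   # interior: barycentric v, w
    return _along(_along(a, ab, vb * denom), ac, vc * denom)


def completeness(gt_points, vertices, triangles, tol=0.025):
    """Fraction of GT points within tol of the mesh (brute force)."""
    hits = 0
    for p in gt_points:
        nearest = min(
            math.dist(p, closest_point_on_triangle(
                p, vertices[i], vertices[j], vertices[k]))
            for i, j, k in triangles)
        hits += nearest <= tol
    return hits / len(gt_points)
```

For interior hits the returned distance is the orthogonal distance to the triangle's plane; for points that project outside the triangle it falls back to the nearest edge or vertex. A spatial index over the triangles would be needed for ground truth scans of realistic size.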

Precision measures the distance from each vertex in the computed triangulation to the ground truth scan. We report the fraction of vertices within 25 mm of the ground truth. Precision measures how good a method is at choosing accurate 3D model vertices for triangulation, but not how well the model generalizes over the whole scene.
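A minimal sketch of the precision score follows, assuming the ground truth scan is available as a list of 3D points in metres and that a brute-force nearest-neighbour search is acceptable. The function name and the default tolerance argument are illustrative.

```python
import math


def precision(vertices, gt_points, tol=0.025):
    """Fraction of model vertices whose nearest GT point lies within tol."""
    if not vertices:
        return 0.0
    hits = 0
    for v in vertices:
        # Brute-force nearest neighbour; a k-d tree would scale better.
        nearest = min(math.dist(v, g) for g in gt_points)
        if nearest <= tol:
            hits += 1
    return hits / len(vertices)
```

Note the asymmetry with completeness: here the loop runs over model vertices, so a model with few but well-placed vertices can score high on precision while covering little of the scene.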

Histogram of distance errors from Ground Truth for VR101 sequence

Histogram of distance errors from Ground Truth for VR201 sequence

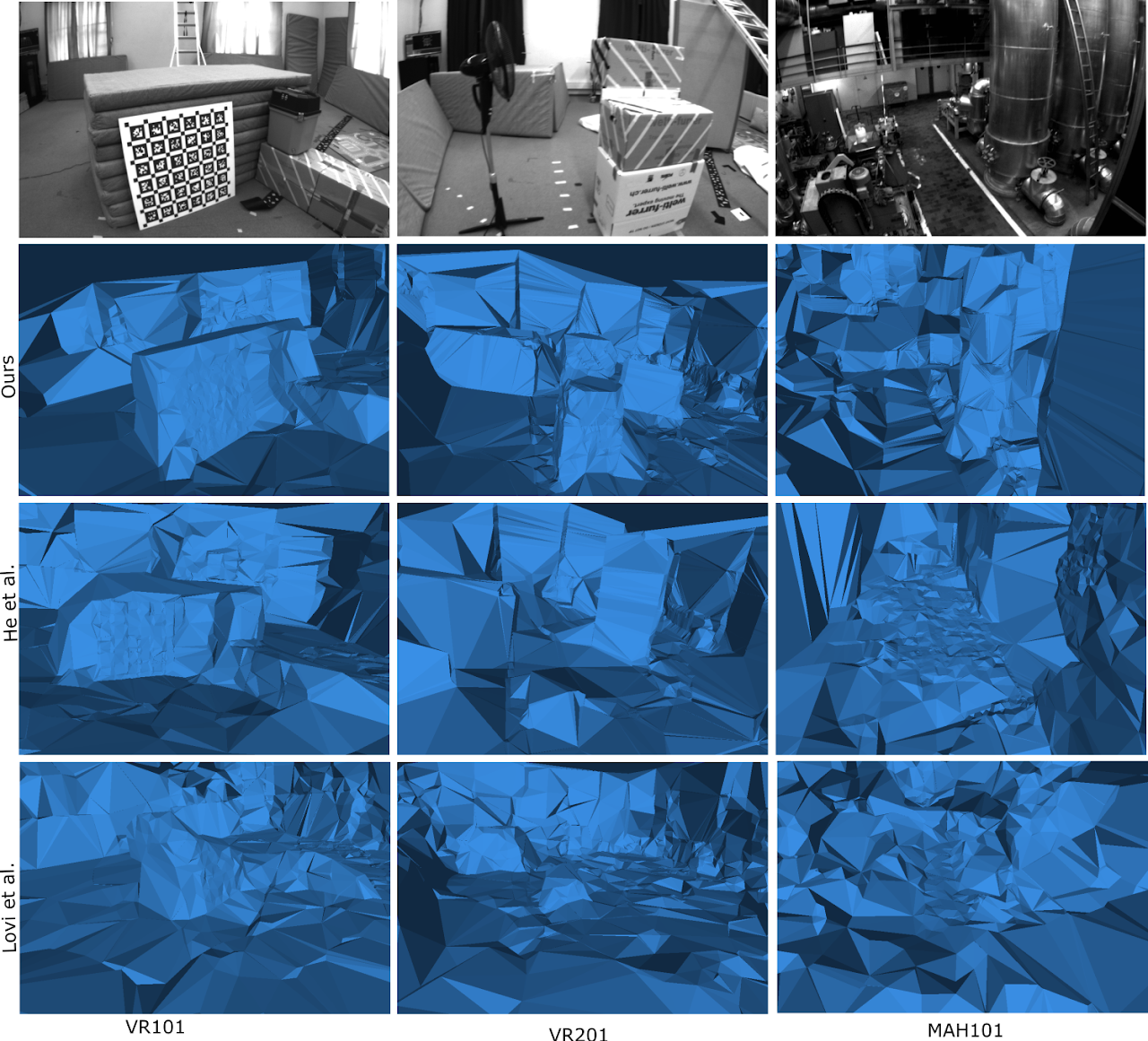

Qualitative Results

Experimental results on benchmarks EuRoC MAV Vicon Room 101, Vicon Room 201, and Machine Hall 01

We also ran our system in a real-time setup organized in our lab. We chose this setup to motivate applications of our work in assistive robotics and predictive display for simple robotic manipulation tasks (e.g., pick-and-place tasks). The figure below shows the textured modeling results of our method from different views.

Textured reconstruction of a 3D scene in a real-time setup. Rows 1 and 3 are the reference keyframes, and Rows 2 and 4 show the reconstructed model from four different viewpoints.

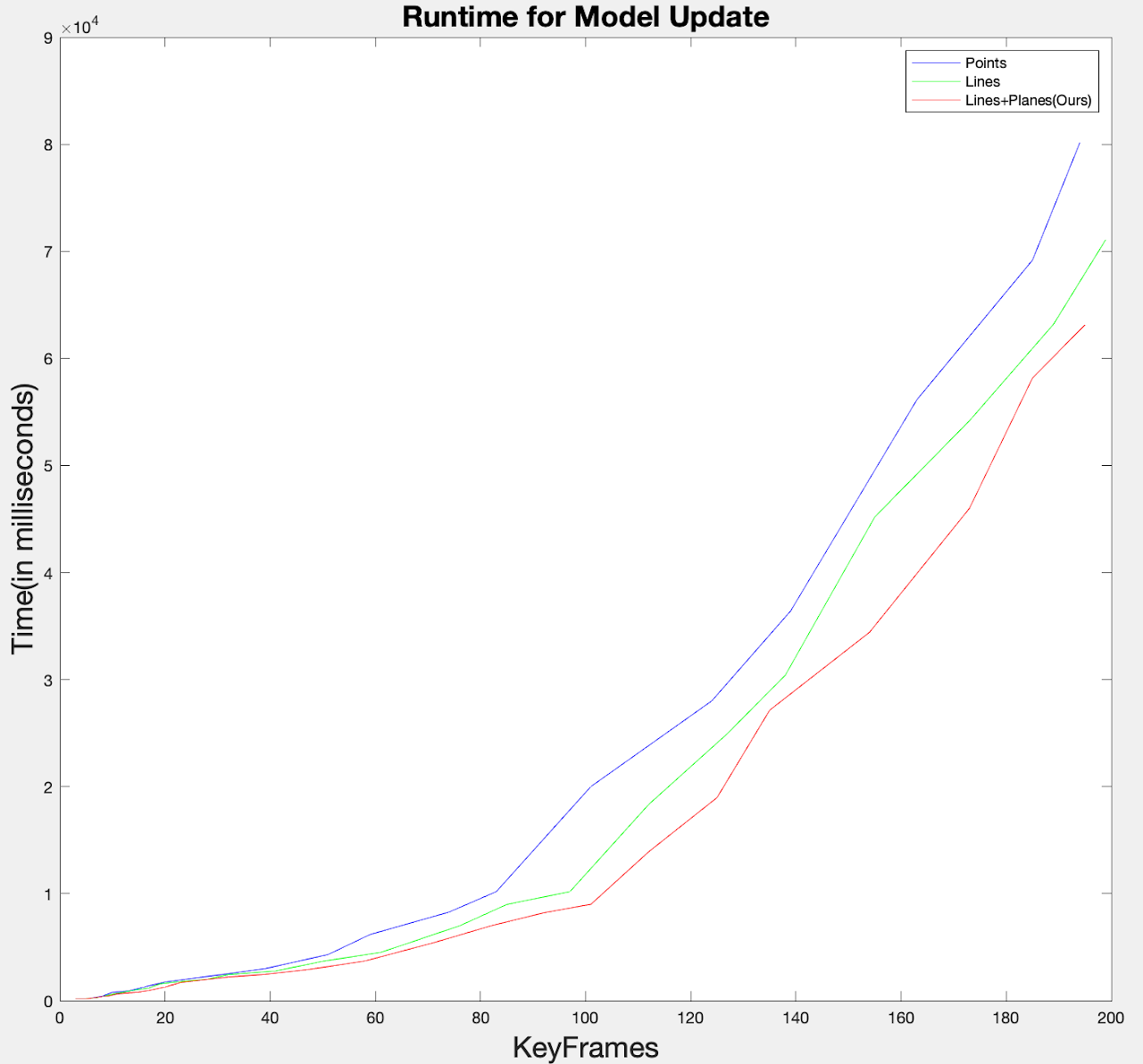

Runtime Complexity

Runtime complexity of point-based, line-based, and plane-based (ours) surface reconstruction. The timestamp represents the average time (in milliseconds) taken for a model update at a keyframe. (Blue) Point-based modeling, (green) line-based modeling, (red) line+plane-based modeling (ours).

CARV-Gazebo-Short.mp4
