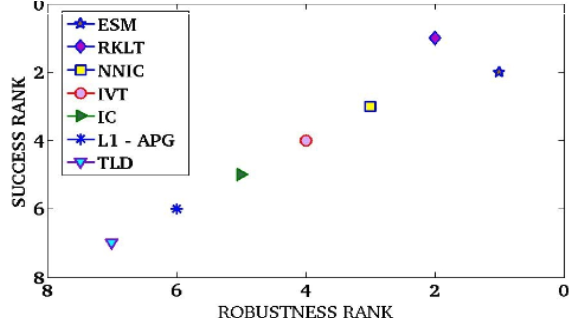

Overall Rank

Seven trackers are ranked in a diagram similar to the one used in the VOT challenge. This gives the full picture of where each tracker lies with respect to the others. The measure was originally designed to test surveillance trackers in VOT; we modified it for use with registration trackers. Relative ranks of trackers are assigned based on their "success rate" and "robustness". "Success rate" is the fraction of all frames in which the tracker stays within a 5 pixel error \( e_{AL} \). Robustness, on the other hand, is defined by how many times the tracker needs to be re-initialised to track the full sequence. A tracker is deemed to have failed once \( e_{AL} > 5 \) pixels. When a tracker fails, it is re-initialised 5 frames from the frame at which it failed, using positional co-ordinates from the ground truth data that we make publicly available.

Finally, the plot shows the global rank of the trackers. Trackers towards the top right are better than those towards the bottom left. We observe that some of the popular trackers in the literature, such as TLD and IVT, perform poorly in a manipulation setup. The strict error threshold of 5 pixels and the error metric \( e_{AL} \), which accounts for full pose alignment of the object, make this measure uniquely suited to evaluating registration based trackers. This makes it hard for the online learned trackers TLD, IVT and L1-APG to achieve satisfactory performance metrics.

These trackers update the appearance of the object dynamically. At times the online learned trackers include scaled, rotated or translated versions of the original image template as part of the object representation. Also, most of them compromise on the state space, tracking a lower DOF state space to offset their computationally expensive learning models. Both of these factors result in lower convergence of the online learned trackers compared to the registration based trackers studied.
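The two ranking quantities described above can be sketched as a small evaluation loop. This is a minimal illustration, not the evaluation code used for the plot. The callback `error_at` is a hypothetical stand-in for running a tracker, and two details are assumptions: re-initialisation happens 5 frames after the failed frame, and frames skipped during that gap are not counted as successes.

```python
from typing import Callable, Tuple


def evaluate_with_reinit(
    error_at: Callable[[int, int], float],
    n_frames: int,
    threshold: float = 5.0,
    reinit_gap: int = 5,
) -> Tuple[float, int]:
    """Return (success_rate, failure_count) for one sequence.

    error_at(frame, start) is a hypothetical callback giving e_AL on `frame`
    for a tracker last (re-)initialised from ground truth at frame `start`.
    """
    if n_frames <= 0:
        raise ValueError("n_frames must be positive")
    successes = 0
    failures = 0
    start = 0
    frame = 0
    while frame < n_frames:
        if error_at(frame, start) <= threshold:
            successes += 1
            frame += 1
        else:
            # Failure: e_AL exceeded the threshold on this frame.
            failures += 1
            # Assumption: restart `reinit_gap` frames after the failed frame.
            start = frame + reinit_gap
            frame = start
    # Skipped frames lower the success rate because they never count as hits.
    return successes / n_frames, failures


# Toy tracker whose error grows by 2 px per frame since its last initialisation.
rate, failures = evaluate_with_reinit(
    lambda frame, start: 2.0 * (frame - start), n_frames=20
)
print(rate, failures)
```

A higher success rate and a lower failure count would both move a tracker towards the top right of the ranking plot.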

Average Drift

Average Drift evaluates how closely the tracker converges to the ground truth data when tracking is considered successful (i.e. \( e_{AL} < t_p \) with \( t_p = 5 \) pixels, \( e_{AL} \) being the misalignment error). Mathematically it is expressed as \( E[e_{AL} \mid e_{AL} < t_p] = \frac{\sum_{f_i \in S} e_{AL}^{i}}{|S|} \), where \( S \subseteq F \) is the set of frames in which tracking is successful. (Results are evaluated on medium speed sequences.)

| Sequence | L1 | IVT | ESM | NNIC | BMIC | RKLT |
| --- | --- | --- | --- | --- | --- | --- |
| Juice | 2.57 | 4.42 | 0.97 | 0.89 | 0.94 | 0.73 |
| Cereal | 4.66 | N/A | 0.61 | 0.61 | 0.15 | 0.63 |
| BookI | 2.95 | 4.92 | 0.73 | 0.73 | 0.70 | 0.92 |
| BookII | 2.68 | 4.17 | 0.70 | 0.70 | 0.73 | 0.82 |
| BookIII | 2.55 | 3.96 | 1.38 | 1.41 | 1.49 | 1.45 |
| MugI | 1.53 | 3.95 | 0.49 | 0.49 | 0.46 | 0.50 |
| MugII | 1.81 | 2.40 | 1.85 | 1.87 | 1.88 | 1.87 |
| MugIII | 2.08 | 3.37 | 0.74 | 0.97 | 0.75 | 1.04 |
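As a rough illustration of how one cell of an average drift table could be computed, the sketch below averages the per-frame error over successfully tracked frames only. The function name and the choice to return `None` when no frame is tracked successfully are assumptions made for this example.

```python
from typing import Optional, Sequence


def average_drift(errors: Sequence[float], t_p: float = 5.0) -> Optional[float]:
    """Mean e_AL over frames with e_AL < t_p, or None if there are none."""
    successful = [e for e in errors if e < t_p]
    if not successful:
        # Assumption: no successfully tracked frame means no drift value.
        return None
    return sum(successful) / len(successful)


# Per-frame errors in pixels; the 9.0 px frame is a failure and is excluded.
print(average_drift([1.0, 2.0, 9.0, 3.0]))
```

Because failed frames are excluded, this number measures alignment quality while tracking succeeds, not how often it succeeds.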

Registration based trackers converge better than the online learned trackers. The online learned trackers use a particle filter search. A particle filter initiates its search from a random seed, which is unlikely to converge to the best alignment if the number of particles is low. Another important reason is that the online learned trackers update the appearance model as tracking goes on. As a result, transformed versions of the original template become part of the appearance model, which may then differ from the starting template.

NNIC is a cascade tracking system consisting of an Approximate Nearest Neighbour (ANN) based search followed by Baker and Matthews' Inverse Compositional (IC) search. This cascade explains the high convergence of the NNIC method compared to ESM. IC uses a Gauss-Newton type optimization, which has quadratic convergence when residuals are low. NNIC uses the ANN search to narrow the search space (this also makes the tracker robust to large motion). The IC part that follows aligns the template closely with the target image.

Speed Sensitivity and Overall Success

| Sequence |

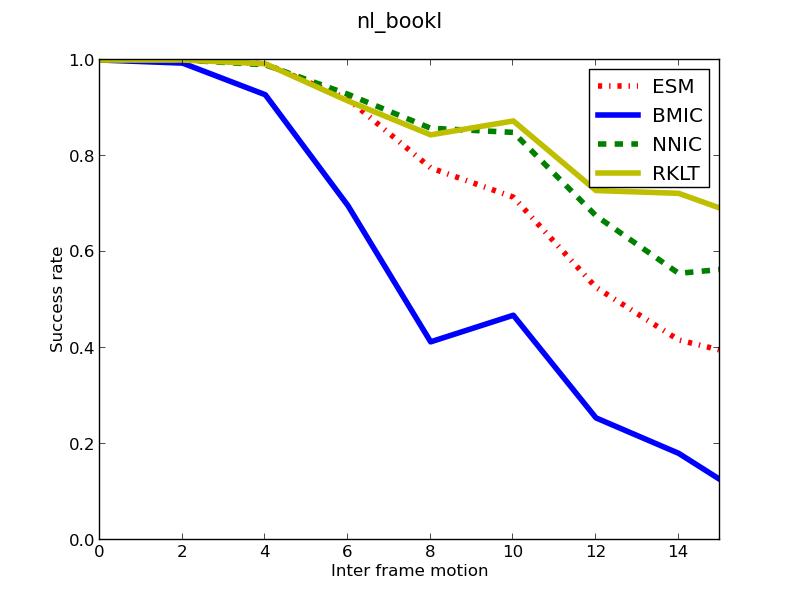

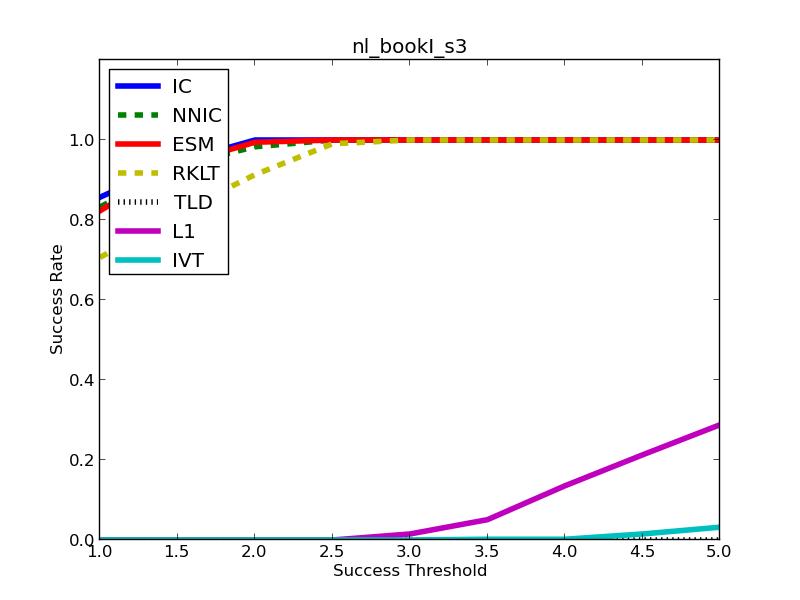

| BookI |

The Approximate Nearest Neighbour (ANN) based search uses a randomised KD-tree. This makes the system more robust to large motions than approaches based purely on Gauss-Newton approximation. The limitation is that, with a low number of templates, it is hard to model the transformation space prior to tracking.

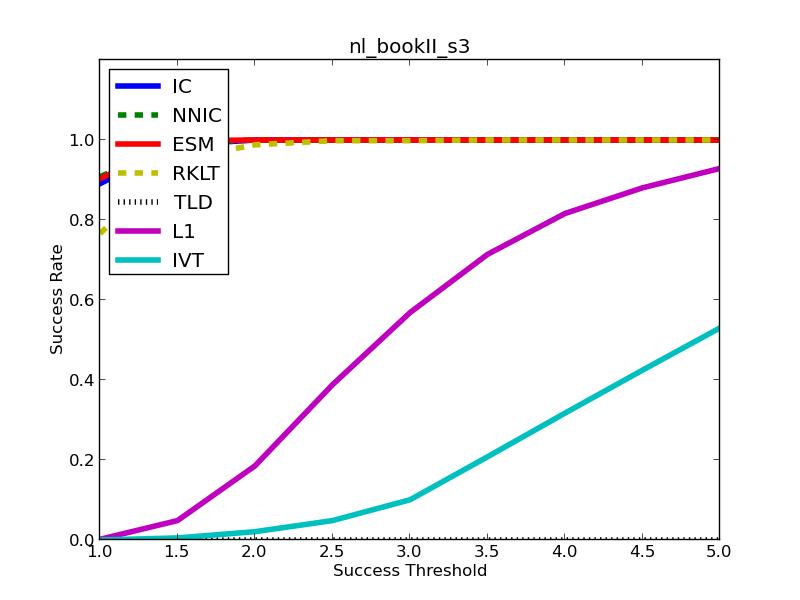

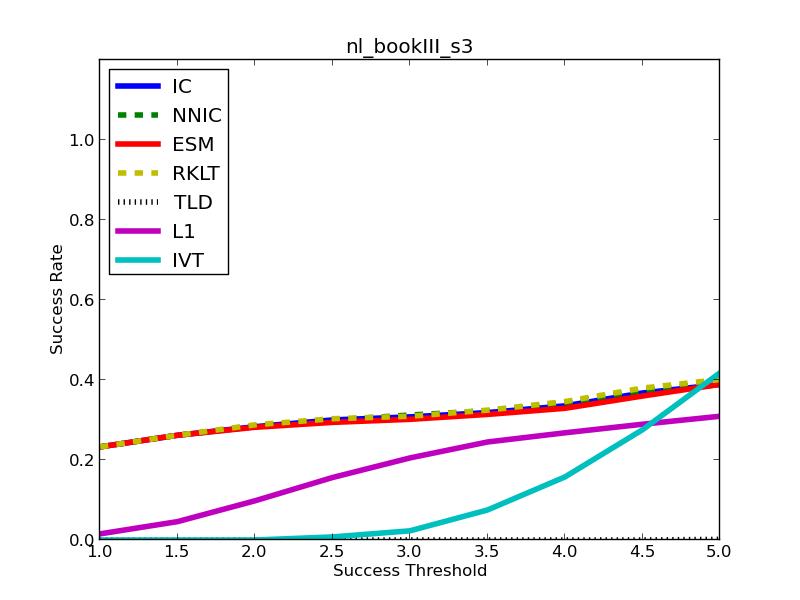

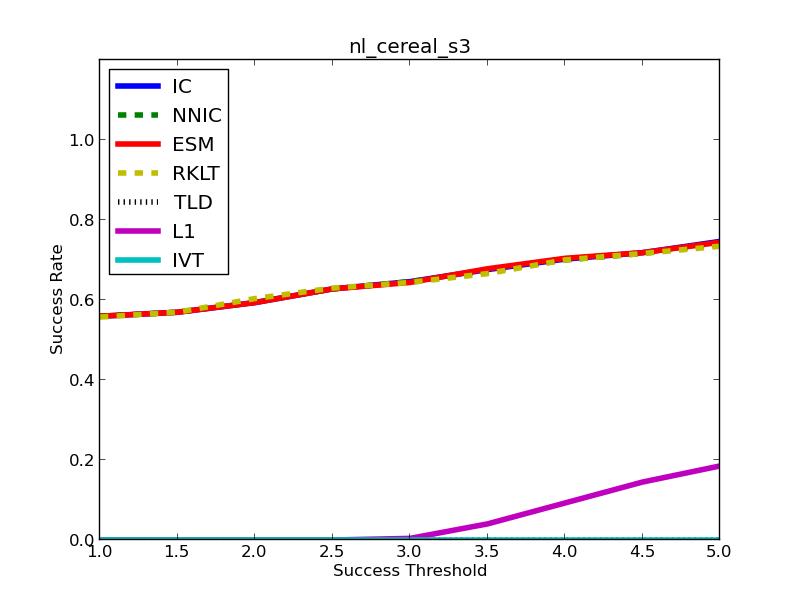

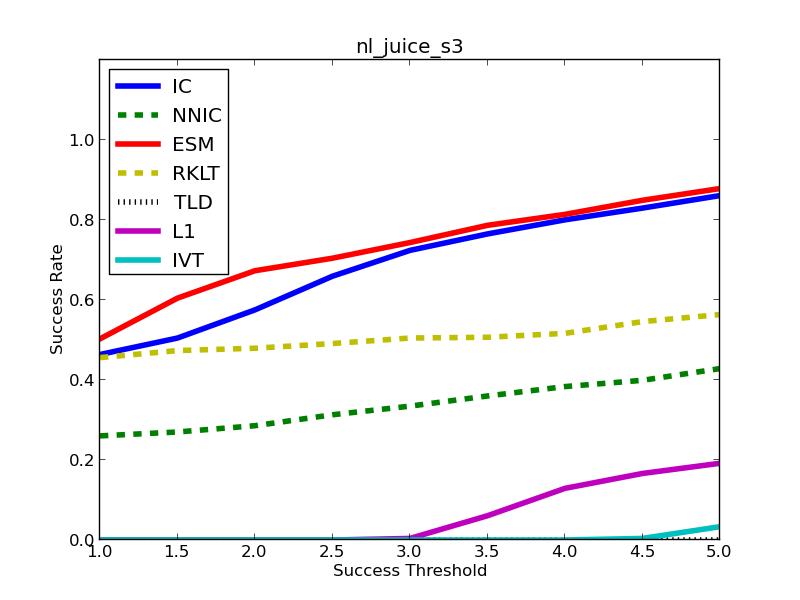

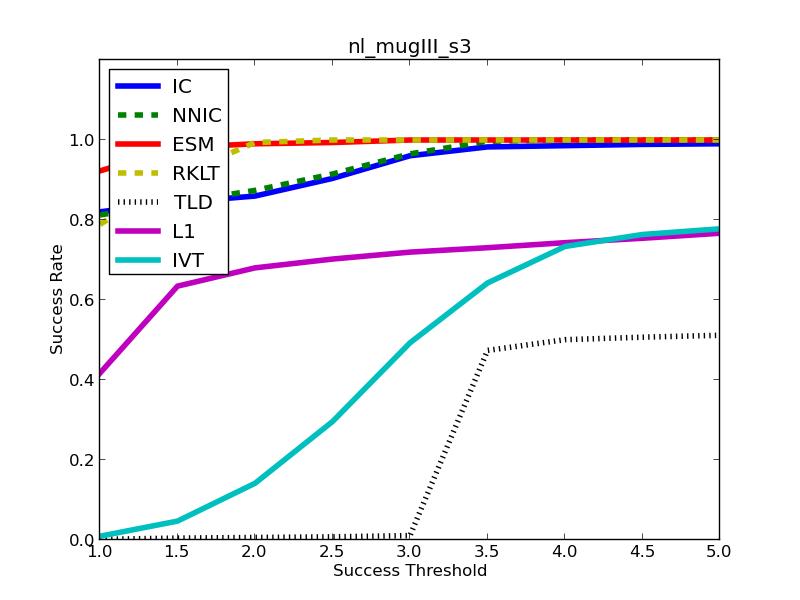

Overall Success is plotted against a changing threshold \( t_p \) at medium speed. Note that as \( t_p \) approaches 5 pixels (the threshold we use to judge a tracker's success), NNIC, BMIC, ESM and RKLT converge close to 1. The three online learning based trackers, which have a low DOF state space, fail to track the perspective transformation of the object.
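A success curve of this kind can be sketched as the fraction of frames whose error falls below each threshold. The function name and the strict `<` comparison are assumptions for illustration; the error list is made-up toy data, not a result from the sequences.

```python
from typing import List, Sequence


def success_curve(errors: Sequence[float], thresholds: Sequence[float]) -> List[float]:
    """Fraction of frames with e_AL below each threshold t_p."""
    if not errors:
        raise ValueError("errors must not be empty")
    n = len(errors)
    return [sum(1 for e in errors if e < t_p) / n for t_p in thresholds]


# Toy per-frame errors in pixels, evaluated at t_p = 1..5.
toy_errors = [0.5, 1.5, 2.5, 6.0]
print(success_curve(toy_errors, [1.0, 2.0, 3.0, 4.0, 5.0]))
```

A tracker whose curve reaches 1 near \( t_p = 5 \) keeps every frame within the success threshold, while a curve that flattens below 1 indicates frames that are lost entirely.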

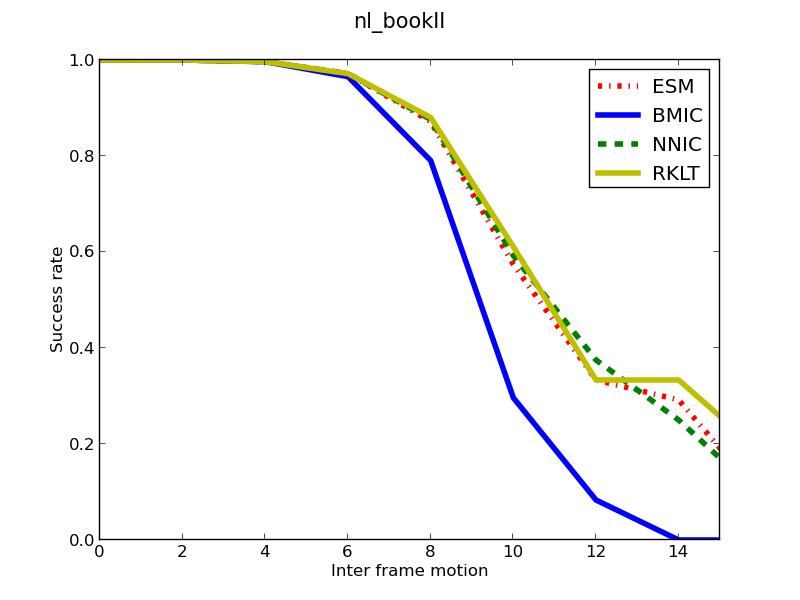

| BookII |

This sequence covers a 3 DOF transformation of the object in translation and scale. All three registration based trackers, which use a full homography state space, track well, with the same observation that ESM, RKLT and NNIC track large motion better than BMIC.

The online learned trackers L1 and IVT, which model a 6 DOF state space in their motion model, track better here than on other sequences where the object undergoes a higher DOF transformation.

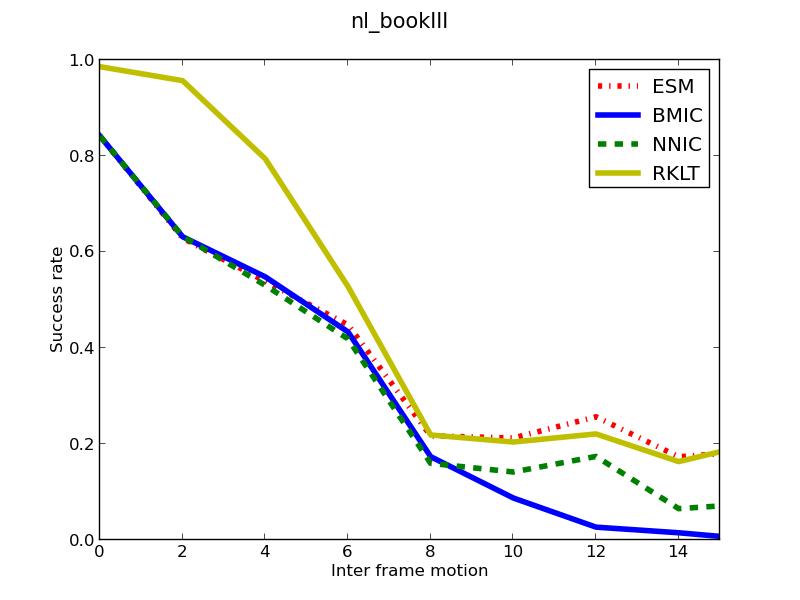

| BookIII |

This sequence presents the challenge of occlusion. The book holder occludes part of the book when the book is placed inside the holder. All three registration based trackers fail to track in the presence of occlusion.

The online learned trackers handle occlusion because they update their object representation during tracking: IVT updates the appearance model of the object and L1 updates the subspace.

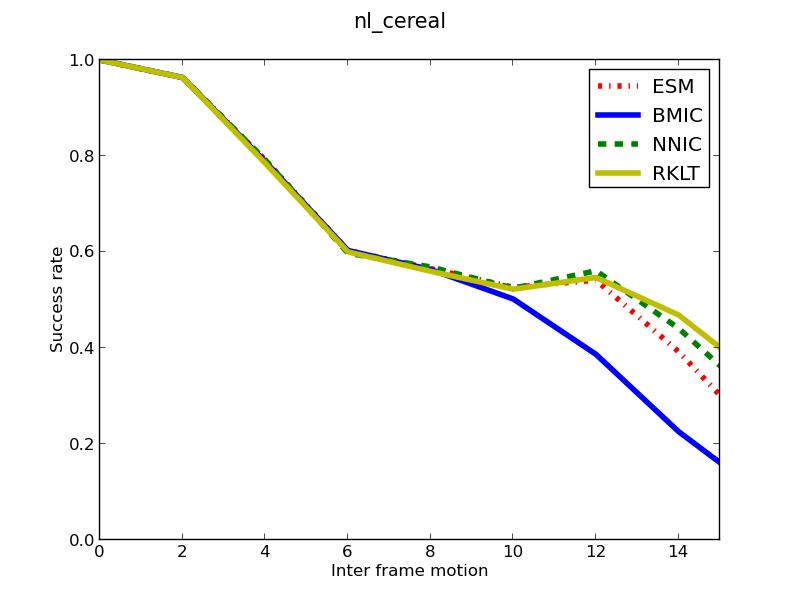

| Cereal |

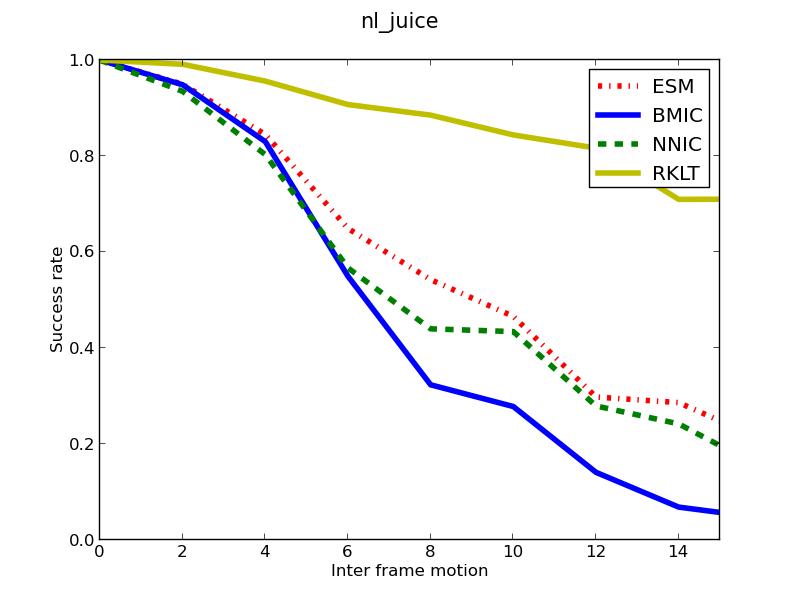

| Juice |

Even though the motion in the Juice sequence is similar to that of the cereal box, the inter-frame motion graphs are different. One prominent difference is in the setup: the textures of the two objects are different. We ran static experiments, replacing the standard Lena image with the cereal and juice box templates, to confirm this. Further analysis is provided in the Technical Report.

The success plots show that NNIC is more susceptible to texture change than ESM. Success rates drop much more for the Juice sequence than for the Cereal sequence. This is also seen in the Mug sequences (graphs shown below).

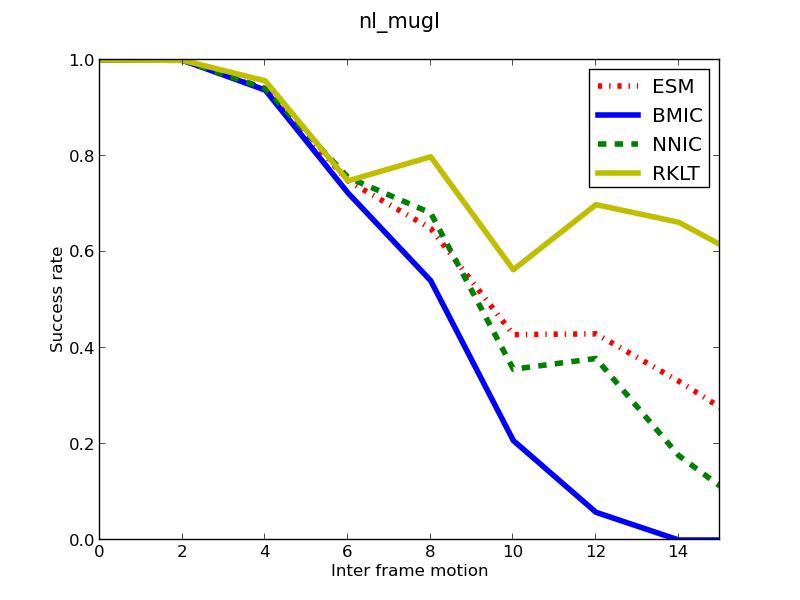

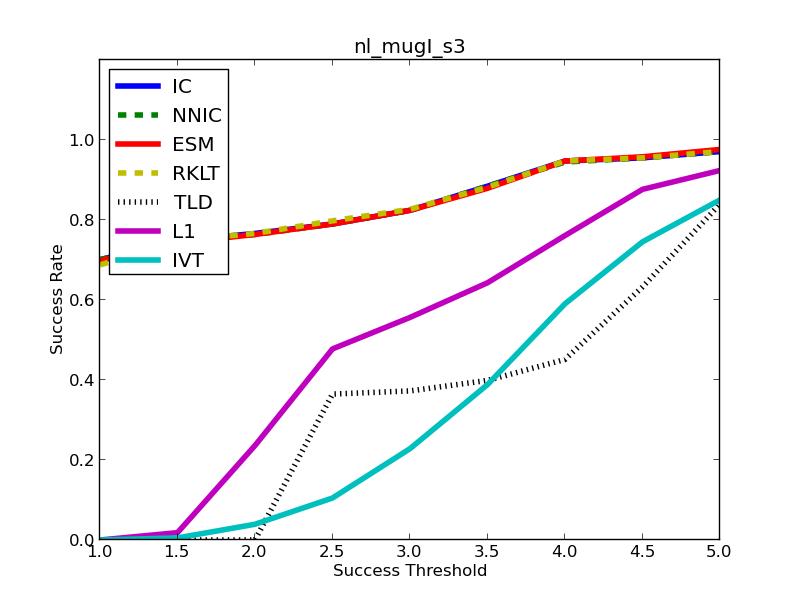

| MugI |

A low-texture, specular object is picked up and placed back on the table. The motion is purely translational, but the appearance of the object makes it hard to track.

All the trackers converge close to 1 at \( t_p = 5 \) pixels. This can be explained by the fact that the motion of the object is pure translation, which all trackers successfully represent in their motion model.

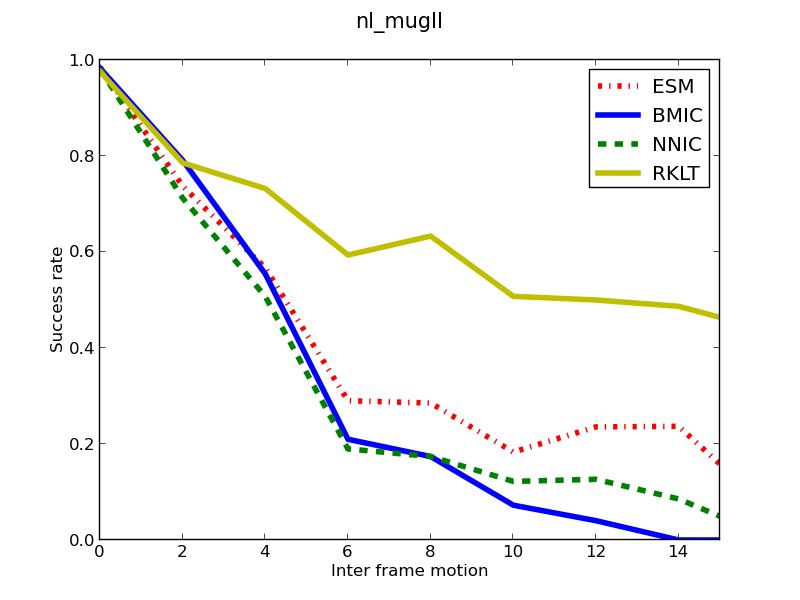

| MugII |

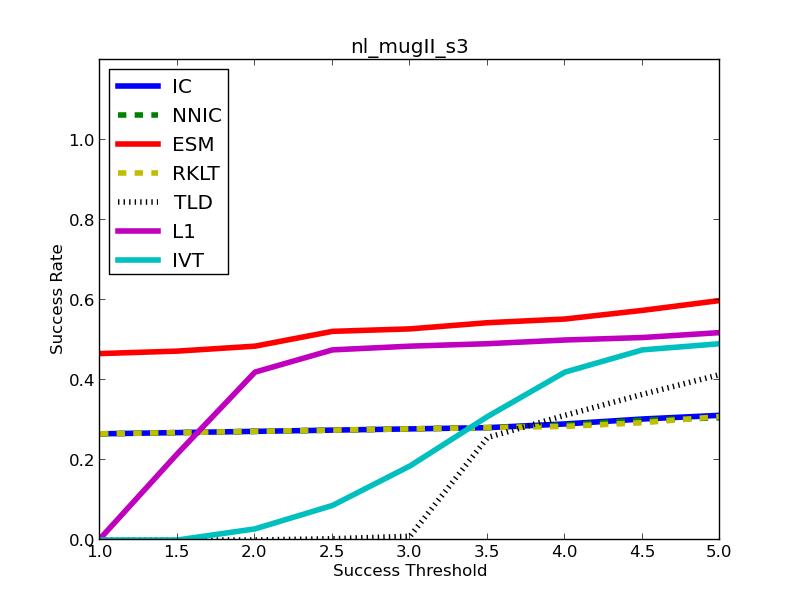

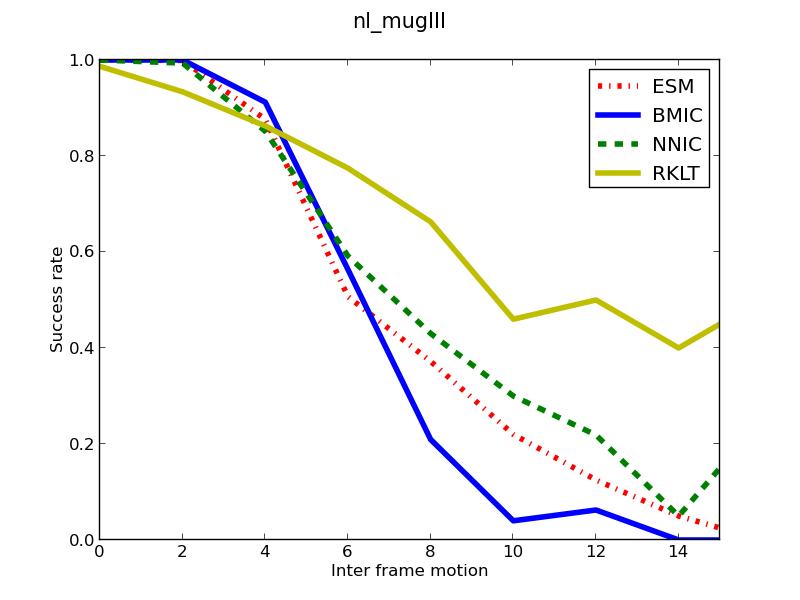

| MugIII |

NNIC is a cascade tracker whose search step has two parts: first an Approximate Nearest Neighbour (ANN) search, followed by Baker and Matthews' Inverse Compositional search. The ANN search is modelled as follows: warped templates are tagged with their warp parameters and stored in a KD-tree. During tracking, this tree is searched efficiently to give the closest matching template and its corresponding warp.
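The template lookup described above can be illustrated with a deliberately simplified sketch. It is not the NNIC implementation: the "image" is a 1-D signal, the warp is an integer shift, and an exact linear scan stands in for the approximate KD-tree search. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Entry = Tuple[List[float], int]  # (warped template, warp parameter)


def ssd(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two equally sized patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_warp_index(
    signal: Sequence[float], start: int, length: int, shifts: Sequence[int]
) -> List[Entry]:
    """Tag each warped template with the shift that produced it."""
    return [(list(signal[start + s : start + s + length]), s) for s in shifts]


def nearest_warp(index: List[Entry], patch: Sequence[float]) -> int:
    """Return the warp of the stored template closest to `patch`.

    Assumption: a linear scan replaces the KD-tree lookup for brevity.
    """
    _, warp = min(index, key=lambda entry: ssd(entry[0], patch))
    return warp


# Textured toy signal; the observed patch is the template shifted by 2 samples.
signal = [float((4 * i) % 11) for i in range(40)]
index = build_warp_index(signal, start=10, length=8, shifts=range(-3, 4))
observed = signal[12:20]
print(nearest_warp(index, observed))
```

In a cascade, the warp returned by this lookup would serve as the starting point for the Inverse Compositional refinement.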

This search takes place in the image space, which makes the approach sensitive to the texture of the object. The warped templates of low-textured objects vary less than those of highly textured objects. This leads to similar images being tagged with different warps. Because the search happens in image space, the updates are more likely to be incorrect if the texture of the object is low.
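The texture argument can be made concrete with a toy measurement: how far apart, in image space, are the two most similar templates that carry different warps? The sketch below uses 1-D signals and integer shifts as a stand-in for images and homographies; both signals and the function name are made up for illustration.

```python
from typing import List, Sequence


def ssd(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two equally sized patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def min_template_separation(
    signal: Sequence[float], start: int, length: int, shifts: Sequence[int]
) -> float:
    """Smallest SSD between any two templates generated by different shifts."""
    templates: List[Sequence[float]] = [
        signal[start + s : start + s + length] for s in shifts
    ]
    return min(
        ssd(a, b)
        for i, a in enumerate(templates)
        for b in templates[i + 1 :]
    )


shifts = range(-3, 4)
# High texture: values change sharply from sample to sample.
textured = [float((4 * i) % 11) for i in range(40)]
# Low texture: an almost constant intensity ramp.
smooth = [100.0 + 0.01 * i for i in range(40)]

high = min_template_separation(textured, start=10, length=8, shifts=shifts)
low = min_template_separation(smooth, start=10, length=8, shifts=shifts)
print(high, low)
```

When the separation is small, as for the smooth signal, a little image noise is enough for the nearest template to be one tagged with the wrong warp.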

Evidence of this is seen in the mug sequences. Relative to ESM, the success rate of NNIC is lower for low-texture objects (mug) than for high-texture objects (book). This can also be seen from objects that have similar motion but differ in texture. The juice box has lower texture than the cereal box. As a result, the success rate of NNIC drops substantially even though the motion being tracked is the same.

References